DIT University, Dehradun 248009

Corresponding author Email: anujbit@gmail.com

Article Publishing History

Received: 01/04/2019

Accepted After Revision: 01/06/2019

Cloud computing has changed the way IT-based services are delivered to end users. It is a growing, arguably mature, technology that offers many benefits, both economic and in terms of cost-effective resource utilization. Cloud services are offered through data centres situated at various locations, and these data centres use virtualization, which brings further benefits to end users. The giants of cloud computing, such as Google, Amazon, and Microsoft, rely entirely on data centres to meet the dynamic demand raised by their services. However, the benefits of cloud-based services come with various energy-related issues. Energy efficiency is very important for the future of information and communication technology (ICT), so considerable effort is now being made to minimize the power consumption of data centres. In the present paper, the issues related to energy efficiency are discussed and investigated. The main aim of the paper is to study the various energy-efficient techniques for data centres and to compare these approaches, using data drawn from research articles, papers, and the internet.

CDC, DVFS, Energy Saving, Energy Consumption, Power Saving, QoS.

Yadav A. K, Garg M. L, Ritika. The Issues of Energy Efficiency in Cloud Computing Based Data Centers. Oryzae. Biosc.Biotech.Res.Comm. 2019;12(2). Available from: https://bit.ly/31LfMnv

Copyright © Yadav et al. This is an open access article distributed under the terms of the Creative Commons Attribution License (CC-BY) https://creativecommons.org/licenses/by/4.0/, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Introduction

Cloud computing can be considered a model that delivers services and resources dynamically over the internet according to the requirements of end users. It allows end users to move their work and services into the cloud and to use these services anywhere in the world, whenever required, through internet connectivity. In this way, end users obtain quality of service in a reliable and efficient manner (Tianfield et al, 2018).

With the advent of cloud-based services, remote collaboration has become very easy, and a large number of users can work together (Damien et al, 2011). End users can use applications as well as storage through the cloud, because cloud-based services are delivered as platform-based, infrastructure-based, and software-based services. These are known as service delivery models. Each delivery model can be deployed as a public cloud, a private cloud, or a hybrid cloud (YI et al, 2018).

Cloud computing uses virtualization to make efficient use of software and hardware. The idea is to deliver services to end users on demand, with users paying only according to their usage. Resources are available in large quantity, and all of them are available to users (Yadav et al, 2019). These are some basic characteristics of cloud-based services. The evolution of cloud computing has shifted the ownership-based approach to a subscription-based approach by delivering services on demand and in a scalable fashion (Kotas et al, 2018).

Nowadays there are many cloud service providers that offer cloud resources. These resources may be hardware-based or software-based, and they are used by a variety of users on a pay-as-you-use basis (Yadav et al, 2019).

All the giants among cloud-based service providers (Amazon, Google, Microsoft, Sun, IBM, etc.) are expanding by building data centers across the globe (Mell et al, 2011). These data centers host cloud-based applications, which can be categorized according to their requirements, for example as business applications, gaming services, scientific data processing, and multimedia information delivery (Qureshi et al, 2018).

Running these data centers requires an enormous amount of energy (Clark et al, 2005). Power is needed for network devices, monitors, cooling fans, air conditioners, etc. This demand is increasing day by day: in 2012, the power consumption of data centers across the globe was 65% greater than in the previous year, and nowadays it is more than 100% (Andy et al, 2008).

Although cloud-based services offer financial benefits as discussed above, there are concerns as well, chiefly the large power consumption and the associated carbon emissions. Data centers store large amounts of data in the cloud, and doing so consumes a large amount of energy, which is dissipated in the form of heat. Moreover, global warming is a serious concern around the globe, and cloud-based services may be viewed as one of its sources, because gases such as carbon dioxide and carbon monoxide are released when cloud-computing services are used (Markandey et al, 2018).

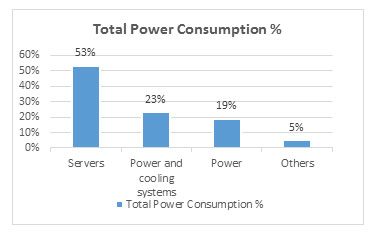

Energy consumption is therefore one of the major areas of concern in the delivery of cloud computing services. The energy and power requirements of data centres are very high, because data centres use power for both computation and cooling. As a result, energy costs become very high and carbon emissions are large. The energy consumption of data centers worldwide has been calculated as 1.4% of total electrical energy consumption (EEC), and it is growing at a rate of 12% every year. A data centre situated in Barcelona pays around £1 million for a power consumption of 1.2 MW, which is roughly the power consumption of 1,200 houses (Toress et al, 2018). If the energy and power consumption of data centers is reduced, it will help not only with global warming but also with the economy, and that would be a great contribution to environmental sustainability. According to an estimate for Amazon.com and its data centers, the operating cost of servers is around 53% of the total budget, and energy-related costs come to 42% of the total, as shown in Table 1.1 and the chart. This energy cost includes the power required to run the systems as well as the entire cooling infrastructure (Hamilton et al, 2009).
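As a rough worked example of the 12% annual growth quoted above, assume the rate stays constant and compounds yearly (an assumption made here for illustration, not a claim from the cited sources). Consumption after $n$ years, relative to today's level $E_0$, and the resulting doubling time are then:

$$E_n = E_0\,(1.12)^n, \qquad n_{\text{double}} = \frac{\ln 2}{\ln 1.12} \approx 6.1 \text{ years}$$

Under that assumption, data center energy consumption would double roughly every six years.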

Table 1.1: Energy distribution in the data center

| Factor | Total Power Consumption (%) |
|---|---|
| Servers | 53% |
| Power and cooling systems | 23% |
| Power | 19% |
| Others | 5% |
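The shares in Table 1.1 can be cross-checked with a few lines of Python. This is only a sketch: it assumes that the 42% energy-related figure quoted in the text is the sum of the "Power and cooling systems" and "Power" rows, which the source does not state explicitly.

```python
# Shares from Table 1.1, in percent of the total.
power_shares = {
    "Servers": 53,
    "Power and cooling systems": 23,
    "Power": 19,
    "Others": 5,
}

# The four categories should account for the whole budget.
total_share = sum(power_shares.values())

# Assumption: "energy-related cost" = cooling/distribution + power draw.
energy_related_share = (
    power_shares["Power and cooling systems"] + power_shares["Power"]
)

print(f"Total: {total_share}%  Energy-related: {energy_related_share}%")
```

Under that reading, the table and the 42% figure in the text agree.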

Figure 2.1: Data Centre Vs Cloud Infrastructure

Because energy use plays an important role in global warming, reducing the energy consumption of data centers would benefit the climate. Energy minimization can also improve the productivity and reliability of the system: it not only reduces cost but also helps protect the environment. Reducing the energy consumption of cloud data centers and cloud computing systems is therefore a challenge, and one that is growing rapidly with time. To counter this situation, effective measures must be taken so that environment-related issues are addressed in time. In this paper, energy-related issues are discussed so that data-center-based cloud computing can become environment-friendly. The remainder of the paper is organized as follows. Section 2 points out the differences between cloud infrastructure and a data center. Section 3 discusses the energy consumption issues of data centers. Section 4 addresses sustainable energy. Section 5 describes the factors that contribute to energy consumption. Section 6 presents the approaches used by various researchers for energy efficiency in data centers. Finally, Section 7 concludes the paper.

Data Centre Vs Cloud Infrastructure

A data center is a facility that houses a collection of servers, routers, switches, and other computing-related devices. In addition, a data center has other components that are required to run it, such as backup systems, power supply systems, and security-related devices. A data center is thus a solution in which a large collection of software and hardware resources is placed at one location and connected to one another. In today's IT enterprise world, the data center powers business applications (Cisco Web, 2018).

A cloud, on the other hand, is a virtual infrastructure that provides services locally or remotely over network connections. Within a cloud computing environment, users can access a variety of resources with ease. The differences between the two are summarized in Table 2.1 below.

Table 2.1: On-premise data center vs. cloud-based infrastructure

| On-Premise Data Center | Cloud-Based Infrastructure |
|---|---|
| High cost and less scalability | Not costly; the user pays according to usage |
| Less flexible | Largely flexible and customizable |
| No remote access to data | Data can be accessed from any location |
| Implementation takes a lot of time | Can be implemented in less time |
| No automatic updates | Updates on a regular basis |
| Needs specialized staff for maintenance | Managed and maintained by the provider |

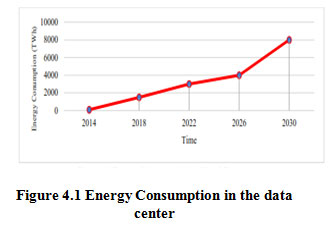

Figure 4.1: Energy Consumption in the data center

Therefore, looking at the differences, we can say that data center is a broader term than cloud (Quora Web, 2018). However, the two are closely associated with one another.

Energy consumption issues in Data Centres and Cloud Infrastructures

As already discussed, data center is a broader term than cloud computing and covers a large collection of servers and power-related devices. For example, the NSA data center situated in the USA consumes more than 70 MW of electricity. With the increased demand for cloud-based applications from organizations, many data centers have come into existence. In general, a data center with an area of 50,000 square feet needs 5 MW of electricity, which is enough for 5,000 households per year. All the cooling equipment required for the data centre needs to run round the clock (Slashdot web, 2018).
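To make the household comparison above concrete, the following sketch converts the quoted 5 MW and 5,000 households into an average draw per household and an annual energy figure. The function name and the assumption of constant, round-the-clock draw are illustrative choices, not part of the cited source.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def household_equivalent(power_mw: float, households: int) -> dict:
    """Spread a constant power draw evenly over a number of households."""
    avg_kw_per_household = power_mw * 1000 / households
    annual_mwh = power_mw * HOURS_PER_YEAR  # energy = power x time
    return {
        "avg_kw_per_household": avg_kw_per_household,
        "annual_mwh": annual_mwh,
        "annual_mwh_per_household": annual_mwh / households,
    }


# Figures quoted in the text: 5 MW, 5,000 households.
result = household_equivalent(power_mw=5, households=5000)
print(result)
```

With these inputs, the 5 MW facility corresponds to an average of 1 kW per household, or 43,800 MWh per year in total if the load is constant.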

Therefore, energy and cost minimization is a new requirement that drives researchers to develop new technologies and algorithms (Ghamkhari et al, 2013).

Data centers and the Challenge in Sustainable Energy

As discussed in the previous section, cloud computing provides demand-based solutions to end users, and to ensure the reliability and availability of these solutions a Cloud Data Centre (CDC) should be functional 24/7. The activities performed during its various operations generate a large amount of data, which is stored and processed at the data centers (Dou et al, 2017).

However, creating, storing, and processing data incurs energy costs, carbon emissions, and other issues that disturb the environment. Because data centres consume an enormous amount of power, we face the challenges of a sustainable energy economy. With the increase in demand for cloud-based services, the demand for data centers has also increased, and it is growing many-fold day by day. If demand keeps increasing like this, data centers are expected to require 8000 terawatt-hours (TWh) of energy by 2030, as shown in Figure 4.1 (Buyya et al, 2018).

At present, data center service providers are looking for ways to reduce the carbon footprint of their infrastructure. All the giants among cloud data center providers are turning to renewable energy sources so that their data centers deliver services with a minimal carbon footprint and minimal greenhouse gas emissions (Li et al, 2018).

Taking all this into consideration, a mechanism to provide cooling is required. However, developing such solutions also increases cost. One way to address this issue is to use waste heat energy and to make use of free cooling units. To do so, suitable waste heat locations need to be found. For enabling sustainability in the cloud, the siting of data centres is critical. Data centre locations need to be chosen based on:

- Accessibility of available green resources

- Opportunities for waste heat recovery

- Positioning of free cooling systems

Thus, all these issues need to be addressed to enable energy-efficient cloud-based systems.

Energy consumption factors in Data Centre

If we know exactly how the servers of data centres consume energy, then we can take corrective measures to control it. Servers consume a lot of energy in the cloud computing environment, and this consumption is dynamic: it changes with server utilization. Consumption also depends on the type of application or computation running on the server; for example, data retrieval applications take less time than data processing applications. Electrical and networking equipment also contribute to energy consumption.

In addition, energy consumed during server idle time also contributes to the total, although this contribution is small.

Power for the UPSs is supplied through power conditioning systems. The UPSs require continuous charging so that power can be supplied to the required equipment. The UPSs distribute electricity at very high voltage to the power distribution units. Another major source of energy usage in the data center is the cooling system, which maintains the necessary temperature and humidity. Cooling in the data center starts with the CRAH (computer room air handler), which transfers the heat generated by servers to a chilled-water cooling loop. This process requires a large amount of energy, so efforts must be made to minimize all these factors (Fan et al, 2007) (Uchechukwu et al, 2012).

Therefore, power consumption can be broadly categorized into two types: power consumed during idle time and power consumed during processing.

$$P_t = P_i + P_p$$

where $P_t$ is the total power consumption, $P_i$ is the power consumed in idle time, and $P_p$ is the power consumed during processing.
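The idle/processing split above can be turned into a small calculation. The sketch below assumes that processing power grows linearly with CPU utilization between an idle and a peak value; this linear form is a commonly used approximation and an assumption here, not something the paper specifies. The 100 W and 250 W figures are hypothetical.

```python
def total_power(p_idle_w: float, p_max_w: float, utilization: float) -> float:
    """Total server power = idle power + processing power.

    Assumption: processing power scales linearly with utilization,
    from 0 W when idle up to (p_max_w - p_idle_w) at full load.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    p_processing_w = (p_max_w - p_idle_w) * utilization
    return p_idle_w + p_processing_w


# Hypothetical server: 100 W when idle, 250 W at full load.
for u in (0.0, 0.5, 1.0):
    print(f"utilization={u:.1f} -> {total_power(100, 250, u):.0f} W")
```

In this hypothetical case the idle term alone is 40% of peak power, which is why switching off or sleeping lightly loaded servers appears among the saving approaches discussed next.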

Energy Saving approaches in cloud computing

The performance and energy usage of systems depend on various parameters. Some basic approaches to saving energy are turning servers on and off as required, putting servers in sleep mode during idle time, using DVFS (Dynamic Voltage/Frequency Scaling), and making intelligent use of virtual machines or Docker containers for better resource utilization. Many researchers are working on reducing energy consumption in cloud-based data centers. Some of the approaches are summarized in Table 6.1.
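To illustrate why DVFS saves energy, the sketch below uses the standard first-order approximation for CMOS dynamic power, P = C · V² · f. It ignores static (leakage) power, and the function names and the 80% scaling factors are illustrative assumptions rather than values from the surveyed papers.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Approximate CMOS dynamic power in watts: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


def power_ratio(v_scale: float, f_scale: float) -> float:
    """Dynamic power after scaling, relative to the unscaled operating point."""
    return v_scale ** 2 * f_scale


def energy_ratio_fixed_work(v_scale: float) -> float:
    """Dynamic energy for a fixed number of cycles, relative to unscaled.

    Run time grows as 1 / f_scale, so the frequency term cancels and
    energy scales with V^2 only.
    """
    return v_scale ** 2


# Illustrative operating point: voltage and frequency both lowered to 80%.
print(f"power ratio:  {power_ratio(0.8, 0.8):.3f}")
print(f"energy ratio: {energy_ratio_fixed_work(0.8):.3f}")
```

Under this simplified model, lowering both voltage and frequency to 80% roughly halves dynamic power, while the energy for the same amount of work falls to about 64%, at the cost of a longer run time.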

Table 6.1: Energy saving approaches in cloud computing

| Authors | Technique Used | Aim | Approach Applied |
|---|---|---|---|
| (Stillwell et al, 2009) | Virtual machine consolidation and resource throttling | Lower energy under some performance constraints | Use of heuristic algorithms |
| (Kusic et al, 2009) | Virtual machine consolidation and server power switching | Low power under some performance constraints | Use of a resource provisioning framework |
| (Kim et al, 2009) | Leveraging heterogeneity, DVFS | Minimum energy under performance constraints | Lowest-DVS, Advanced-DVS, Adaptive-DVS |
| (Li et al, 2013) | Load balancing of actual resources present across virtual machines and load migration across virtual machines | Balanced resource utilization and power saving | An algorithm on the CloudSim toolkit |
| (Ghribi et al, 2013) | Resource migration and virtual machine scheduling | Energy saving based upon the load on the system | An algorithm on a Java simulator |
| (Peoples et al, 2013) | Workload scheduling | Power saving and better server utilization | Java-based tool |
| (Murtazaev et al, 2011) | Virtual machine consolidation method | Reduce energy consumption by lowering the number of active servers | New simulation tool |
| (Lago et al, 2011) | Virtual machine scheduling and migration | Virtual machines in non-federated homogeneous and heterogeneous data centers; also improves power consumption under load | An algorithm on the CloudSim toolkit |
| (Beloglazov et al, 2010) | Effective dynamic relocation of virtual machines, DVFS | Minimize power consumption, satisfy performance requirements | CloudSim toolkit |
| (Buyya et al, 2010) | Resource allocation and scheduling, adaptive utilization | QoS, minimize energy consumption, green resource allocator | CloudSim toolkit |
| (Buyya et al, 2018) | Thermal-aware scheduling, capacity planning, renewable energy, waste heat utilization, energy-aware resource management technique | Decrease in carbon footprint of cloud data centers; energy efficiency of power infrastructure and cooling devices by integrating them | Conceptual model |
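Several entries in Table 6.1 rely on virtual machine consolidation, that is, packing VMs onto as few servers as possible so that the remaining servers can be switched off. The sketch below shows the general idea with a generic first-fit-decreasing heuristic. It is not the algorithm of any of the cited authors, and the function name, the single CPU dimension, and the example loads are hypothetical.

```python
from typing import List


def consolidate_ffd(vm_loads: List[int], host_capacity: int) -> List[List[int]]:
    """Pack VM CPU loads (in percent) onto hosts using first-fit decreasing.

    Returns one list of VM loads per active host.
    """
    hosts: List[List[int]] = []  # VMs placed on each active host
    free: List[int] = []         # remaining capacity of each active host

    # Place the largest VMs first; each goes to the first host with room.
    for load in sorted(vm_loads, reverse=True):
        if load > host_capacity:
            raise ValueError(f"VM load {load} exceeds host capacity")
        for i, room in enumerate(free):
            if load <= room:
                hosts[i].append(load)
                free[i] -= load
                break
        else:
            # No active host can take this VM, so power on a new one.
            hosts.append([load])
            free.append(host_capacity - load)
    return hosts


# Hypothetical example: six VMs, hosts with 100% CPU capacity each.
placement = consolidate_ffd([50, 20, 70, 30, 10, 20], host_capacity=100)
print(f"{len(placement)} active hosts: {placement}")
```

In this example the six VMs fit on two hosts instead of six, so the other servers could be put to sleep or switched off. A realistic scheduler would also weigh memory, migration cost, and QoS constraints, as the approaches in the table do.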

Conclusion

Nowadays cloud computing is a driving force that defines a new direction for the IT industry, and almost every organization is moving towards it because of its benefits. With the increase in demand, cloud systems possess large IT resources, and running all these resources requires a great deal of power and energy.

This has become an issue for both ecological and economic reasons. In this paper, the need for energy conservation in cloud computing was investigated, and the issues of the cloud computing environment that relate to data centers were identified. Solutions for reducing energy and power consumption in cloud computing were also investigated. It has been observed that some components in the architecture of cloud computing can contribute to energy saving. On the hardware side, the CPU can yield more energy saving than other components such as servers, nodes, and memory. In addition, several solutions to the problem were studied that provide better management of power consumption in cloud computing. These approaches generally consider a few factors, such as server scheduling, QoS (quality of service), network topology, workload scheduling, virtual machine migration, waste heat utilization, and load balancing. As future work, the solutions provided by researchers can be compared, and a new algorithm can be developed that provides maximum energy efficiency and minimizes CO2 emissions without reducing the QoS in cloud computing.

References

Andy H.(2008), Green Computing, Communication of the ACM 51.10, pp.11-13

Awada Uchechukwu, keqiu Li, Yanming Shen (2012), Improving Cloud Computing Energy Efficiency, IEEE Asia Pacific Cloud Computing Congress (APCloudCC) ,pp 53-58

Beloglazov, A., Buyya, R. (2010), Energy Efficient Resource Management in Virtualized Cloud Data Centers, 10th IEEE/ACM Inter. Conf. on Cluster, Cloud and Grid Computing, CCGRID’10

Buyya Rajkumar and Singh Gill Sukhpal, (2018), Sustainable Cloud Computing: Foundations and Future Directions, Business Technology & Digital Transformation Strategies, Cutter Consortium, Vol. 21, no. 6, Pages 1-9

Buyya, R., Beloglazov, A., Abawajy, J. (2010), Energy-Efficient management of data center resources for cloud computing: A vision, architectural elements, and open challenges, Proc. of Inter. Conf. on Parallel and Distributed Proc. Tec. and Appl.

Clark, C., Fraser, K., Hand, S., Hansen, J.G., Jul, E., Limpach, C., Pratt, I., and Warfield (2005), Live migration of virtual machines, Proc of the 2nd Conf. on Sym on Networked Sys Design & Implementation, Berkeley, CA, USA, USENIX Association. pp. 273-286

Damien B., Michael M., Georges D.-C., Jean-Marc P., and Ivona B. (2012), Energy-efficient and SLA-aware management of IaaS clouds, Proc. of 3rd International Conference on Future Energy Systems: Where Energy, Computing and Communication Meet, Madrid, Spain.

do Lago, D. G., Edmundo, R., Madeira, M., Bittencourt, F. (2011), Power Aware Virtual Machine Scheduling on Clouds Using Active Cooling Control and DVFS, MGC’11 Proc. of 9th Inter. Workshop on Middleware for Grids, Clouds and e-Science, pp. 2

Fan X., Weber W.D., and Barroso L. A. (2007),Power provisioning for a warehouse-sized computer, Proc of 34th International Symposium on Computer Architecture (ISCA 2007), San Diego, California, USA, pp. 13-23

Ghamkhari M. and Mohsenian-Rad H. (2013), Energy and Performance Management of Green Data Centres: A Profit Maximization Approach, IEEE Transactions on Smart Grid, Vol. 4, No. 2, pp. 1017-1025,

Ghribi, C., Hadji, M., Zeghlache, D.(2013), Energy Efficient VM Scheduling for Cloud Data Centers: Exact Allocation and migration algorithms, 13th IEEE/ACM Inter. Sym. on Cluster, Cloud, and Grid Computing, pp. 671-678

Hamilton (2009), Cooperative Expendable Micro-Slice Servers (CEMS): Low Cost, Low Power Servers for Internet-Scale Services, Proc. 4th Biennial Conf. Innovative Data Systems Research (CIDR), Asilomar, CA, USA

http://slashdot.org/topic/datacenter/new-york-timestakes-aim-at-datacenters.

https://www.cisco.com/c/en_uk/solutions/data-center-virtualization/what-is-a-data-center.html.

https://www.quora.com/What-is-the-difference-between-data-center-and-cloud.

Hui Dou, Yong Qi, Wei Wei, and Houbing Song (2017), Carbon-Aware Electricity Cost Minimization for Sustainable Datacenters,IEEE Transactions on Sustainable Computing, vol. 2, no. 2, pp. 211-223

Jordi Toress (2018), Green Computing: The next wave in computing, In Ed. UPC Technical University of Catalonia

Kim, Kyong Hoon, Anton Beloglazov, and Rajkumar Buyya (2009), Power-aware provisioning of cloud resources for real-time services, Proceedings of the 7th International Workshop on Middleware for Grids, Clouds, and e-Science. ACM

Koomey J., Estimating Total Power Consumption by Server in the U.S and the World, http://enterprise.amd.com/Downloads/svrpwrusecompletefinal.pdf

Kotas C., Naughton T. and Imam N. (2018), A comparison of Amazon Web Services and Microsoft Azure cloud platforms for high performance computing, Proc IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, pp. 1-4.

Kumar Yadav, Anuj and Garg, M.L. and Ritika, Dr., An Efficient Approach for Security in Cloud Computing (January 14, 2019). International Journal of Advanced Studies of Scientific Research, Volume 3, Issue 10, 2018. Available at SSRN: https://ssrn.com/abstract=3315609

Kusic, Dara, et al(2009), Power and performance management of virtualized computing environments via look ahead control, Cluster computing 12.1

Li, H., Wang, J., Peng, J., Wang, J., Liu, T. (2013), Energy-aware scheduling scheme using workload-aware consolidation technique in cloud data centers, Communications, vol. 10, no. 12, pp.114-124

Markandey A., Dhamdhere P. and Gajmal Y. (2018),Data Access Security in Cloud Computing: A Review, 2018 International Conference on Computing, Power and Communication Technologies (GUCON), Greater Noida, Uttar Pradesh, India, 2018, pp. 633-636

Mell, Peter, and Timothy Grance (2011), The NIST definition of cloud computing (draft). NIST special publication 800.145

Murtazaev, A., Oh, S., (2011), Sercon: Server consolidation algorithm using live migration of virtual machines for green computing, IETE Tec. Rev., vol. 28, no. 3, pp. 212-231

Naseer Qureshi K., Bashir F. and Iqbal S . (2018), Cloud Computing Model for Vehicular Ad hoc Networks,2018 IEEE 7th International Conference on Cloud Networking (CloudNet), Tokyo,pp.1-3

Peoples, C., Parr, G. McClean, S., Morrow, P., Scotney, B., (2013), Energy Aware Scheduling across ‘Green’ Cloud Data Centres, IEEE Inter. Sym. On Integrated Net. Manage. pp. 876 -879

Stillwell, Mark, et al (2009), Resource allocation using virtual clusters, Cluster Computing and the Grid, CCGRID’09. 9th IEEE/ACM International Symposium on IEEE

Tianfield H. (2018), Towards Edge-Cloud Computing, Proc. of IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, pp. 4883-4885.

Xiang Li, Xiaohong Jiang, Peter Garraghan, and Zhaohui Wu, (2018),Holistic energy and failure aware workload scheduling in Cloud datacentres, Future Generation Computer Systems, vol. 78, pp. 887-900.