1Research Scholar, Department of Computer Science and Engineering, Visvesvaraya Technological University, Belgaum, Karnataka, India.

2Department of Computer Science and Engineering, YSR Engineering College of Yogi Vemana University, Proddatur, Andhra Pradesh, India.

3Department of Information Science and Engineering, NMAM Institute of Technology, Nitte, Karnataka, India.

Corresponding author email: ramamohanchinnem@gmail.com

Article Publishing History

Received: 18/09/2022

Accepted After Revision: 25/11/2022

Multifocus image fusion applies fusion rules to integrate several differently focused images of the same scene. The resulting all-in-focus images are informative and valuable for visual perception. Maintaining shift invariance and directional selectivity in a fused image is crucial. Traditional wavelet-based fusion methods perform poorly because they lack shift invariance and have limited directionality. In this paper, a classical multifocus hybrid wavelet-based approach with principal component analysis (PCA) is proposed. At the first level of decomposition, the stationary wavelet transform (SWT) is applied to the given source images. At the next level, the approximation coefficients of the source images are decomposed and fused using the dual-tree complex wavelet transform (DTCWT), and finally PCA is applied to generate the fused image. The proposed method is analyzed by evaluating various objective quality measures.

Directional Selectivity, Fusion Rules, Image Quality Metrics, Invariant Shift, Principal Component Analysis

Mohan C. R, Kiran S, Vasudeva. Quality Enhancement of Multifocus & Medical Images Using Hybrid Wavelets Based Fusion for Bioengineering Applications. Biosc.Biotech.Res.Comm. 2022;15(4).

Available from: https://bit.ly/3fBMTWF

Copyright © This is an Open Access Article distributed under the Terms of the Creative Commons Attribution License (CC-BY). https://creativecommons.org/licenses/by/4.0/, which permits unrestricted use distribution and reproduction in any medium, provided the original author and sources are credited.

INTRODUCTION

Image acquisition is a crucial stage in many image processing applications. A key limitation when capturing an image is bringing all objects into focus, because the optical lenses used for acquisition have a limited depth of field (DOF). Objects at different distances from the lens therefore appear with different sharpness, which produces multifocus images. To extend the DOF, bring all objects in the image into focus, and improve their sharpness, an inexpensive methodology or algorithm needs to be developed.

Such a technique should appreciably improve the focus of all objects by integrating multiple images captured at different focal planes. Image fusion is a low-cost methodology that many researchers have used over the last decade. The main objective of image fusion algorithms is to combine two or more images acquired at different focal planes. The fused image should support better object detection than any of the individual multifocus images [Chai et al. 2011; Shah et al. 2013; Zhang et al. 2014].

Because the human visual system processes information at multiple resolutions, transform-domain algorithms, or more precisely multiresolution algorithms, are generally preferred. Numerous multiresolution approaches have been proposed in the literature, including pyramid approaches [Petrovic and Xydeas 2004; Wahyuni and Sabre 2016], the discrete wavelet transform [Wang et al. 2003], the stationary wavelet transform [Borwonwatanadelok et al. 2009; Li et al. 2011; Sharma and Gulati 2017], multiresolution singular value decomposition [Naidu 2011], the discrete cosine harmonic wavelet transform (DCHWT), lifting schemes of the wavelet transform (WT), the double density discrete wavelet transform (DDDWT), and the shearlet transform (ST) [Li et al. 2012; Shreyamsha Kumar 2013; Zou et al. 2013; Liu et al. 2014; Pujar and Itkarkar 2016].

In the pyramid domain, the blocking effect is a major problem that causes spatial distortion in fused images. Traditional wavelet-based algorithms cause quality distortions due to ringing artifacts in the image. The DTCWT [Yang et al. 2014; Radha and Babu 2019] and the SWT [Aymaz and Köse 2019] are two important wavelet transforms that address major problems such as shift sensitivity and lack of invariance, while also providing better directional selectivity and better preservation of image details in the fusion process. Implementing these transforms is somewhat more complicated because of the conditions their filters must satisfy. The DTCWT is available with various decomposition levels and filter designs, which makes it flexible among transform-based algorithms. For these reasons, the proposed methodology uses these two wavelet transforms in the fusion process.

Many methods of multifocus image fusion have been proposed over the years. For instance, (Panigrahy et al. 2020) proposed an efficient image fusion model using an improved adaptive PCNN in the NSCT domain. The method makes use of the sub-bands of the source images obtained by the NSCT algorithm, and a new FDFM algorithm is used to determine the adaptive linking strength. Based on their categorization of region-based fusion methods, (Meher et al. 2019) offered an overview of the state of the art in this field.

That survey highlights the importance of objective fusion assessment indicators for comparing the available techniques. To improve imaging systems, (He et al. 2020) formulated a multi-focus image fusion method that uses a cascade-forest model to estimate the impact of fusion rules. In a recent study, (Aymaz and Köse 2019) developed a novel multi-focus image fusion strategy that makes use of a super-resolution hybrid technique.

With the use of a convolutional neural network, (Wang et al. 2019) suggested a new method of multifocus image fusion for the DWT field. The advantages of both spatial and transform domain techniques are merged in the CNN algorithm. The CNN is used to enhance features and construct separate decision maps for different frequency subbands in place of traditional image blocks or source pictures. Furthermore, the CNN method’s use of an adaptive fusion rule is an added bonus.

Variance and energy of Laplacian are the two main focus measures used by (Amin-Naji and Aghagolzadeh 2018), whose multi-focus image fusion method assesses the degree to which the source blocks and artificially blurred blocks correlate in the DCT domain. (Aymaz et al. 2020) apply super-resolution techniques to improve contrast and use the SWT with the dmey (Discrete Meyer) filter for decomposition. Using a gradient-based method and a new fusion rule, a better final image can be achieved.

(Li et al. 2019) work in the wavelet domain to separate the high- and low-frequency coefficients. Deep convolutional neural networks are then used to build a high-quality fused image by learning a direct mapping between the high-frequency and low-frequency components of the source images. (Nejati et al. 2017) used an encircling technique to derive a novel focus metric based on the surface area of regions. It is shown that, using this metric, fusion methods can distinguish blurred regions.

Using MST and CSR, (Zhang 2021) suggested a new fusion approach that addresses both MST and SR fundamental flaws. The low-frequency and detailed directional components of each source image are initially extracted using MST. After that, CSR is employed for the low-pass fusion, while the max-absolute condition was used for high-pass fusion. A classical MIF system that uses the qshiftN-DTCWT and MPCA in the LP domain was proposed by (Mohan et al. 2022) to extract the focused image from a set of input images.

The fused image has the potential to enhance sharpness, directional accuracy, and shift invariance. Using CRF minimization, labels, and ICA-transform coefficients, (Bouzos et al. 2022) proposed CRF-Guided fusion, which guides high-frequency fusion after low-frequency fusion. Through coefficient shrinkage, CRF-Guided fusion makes it possible to denoise the image while fusion takes place. (Zhang and Feng 2022) use the CAOL framework to propose a method for fusing images captured at several focal settings. This technique uses a sharp BPEG-M to learn several convolution filters, solve memory issues, and facilitate parallel computation.

This research contributes new knowledge by applying CAOL-learned filters to three distinct methods of multifocus image fusion. (Ma et al. 2022) proposed a MsGAN (i.e., a multi-scale generative adversarial network) for end-to-end MIF, which maximizes the utility of image features through the fusion of multi-scale decomposition and CNN. The outcome of this method has produced a final fused image with a sharp focus. The rest of the paper follows this structure; Section 2 describes the material and methods. The result and discussions are presented in Section 3. Finally, conclusions are given in Section 4.

MATERIAL AND METHODS

Stationary Wavelet Transform (SWT): Nason and Silverman introduced the SWT, a class of wavelet transforms with shift-invariance and redundancy properties (Nason and Silverman 1995). Shift invariance plays a vital role in denoising and image processing. Instead of downsampling the signal, the SWT upsamples the filters at each level of decomposition by inserting zeros between their coefficients. As a result, every sub-band keeps the size of the input signal. This redundancy costs additional memory and computation, but it is what makes the transform shift-invariant. The SWT decomposes each multifocus input image into four sub-bands: LL, LH, HL, and HH. LL is the low-frequency sub-band that contains the approximation information of the source image. The high-frequency sub-bands LH, HL, and HH contain the detail coefficients of the source image (Aymaz and Köse 2019).
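The two properties described above, same-size sub-bands and shift invariance, can be illustrated with a minimal NumPy sketch of a one-level undecimated Haar decomposition. This is not the authors' implementation: the Haar filter, the periodic boundary handling, the averaging normalization, and the function names `haar_swt2_level1` and `haar_iswt2_level1` are all assumptions chosen for brevity, and the LH/HL labels follow one of several conventions in use.

```python
import numpy as np

def haar_swt2_level1(image):
    """One-level undecimated Haar transform (assumed filter, periodic borders).

    No downsampling is performed, so each sub-band has the input's shape.
    """
    x = np.asarray(image, dtype=float)

    def low(a, axis):
        # Average of each sample and its circular neighbour (low-pass).
        return (a + np.roll(a, -1, axis=axis)) / 2.0

    def high(a, axis):
        # Half-difference of each sample and its circular neighbour (high-pass).
        return (a - np.roll(a, -1, axis=axis)) / 2.0

    lo, hi = low(x, 1), high(x, 1)       # horizontal filtering (axis 1)
    ll, lh = low(lo, 0), high(lo, 0)     # vertical filtering of the low-pass part
    hl, hh = low(hi, 0), high(hi, 0)     # vertical filtering of the high-pass part
    return ll, lh, hl, hh

def haar_iswt2_level1(ll, lh, hl, hh):
    """Inverse of haar_swt2_level1: with this normalization the bands sum to the input."""
    return ll + lh + hl + hh

image = np.arange(64, dtype=float).reshape(8, 8)
bands = haar_swt2_level1(image)
print([band.shape for band in bands])
```

Because low(a) + high(a) equals a along each axis, the four sub-bands add back to the original image, and a circular shift of the input produces the same circular shift in every sub-band.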

Dual-Tree Complex Wavelet Transform: Wavelets are well suited to representing signals across multiple dimensions and scales. However, conventional wavelet transforms have issues with directional sensitivity and shift invariance. The DTCWT is an advanced version of the wavelet transform that addresses these invariance and sensitivity problems. It is implemented with filter banks that split the image information into two parts, real and imaginary. Because of these filter banks, images fused with the DTCWT acquire smooth and continuous edges. The advantages of the DTCWT include directional sensitivity and the scaling of real and imaginary parts at various angles. Detailed information is preserved because each level is represented by six directional sub-bands. The DTCWT filter design also reduces issues related to frequency response and energy preservation.

Principal Component Analysis: PCA transforms a set of correlated variables into a set of uncorrelated variables, the principal components. This technique helps examine data and select the most relevant aspects of a dataset. Following image fusion, the PCA method [Suhail et al. 2014; Naidu and Raol 2008] is used to find the optimal weights for the fused image. These weights are then multiplied by the corresponding fused image to get the all-in-focus image. As part of PCA, the data are projected into eigenspace. Keeping the components whose eigenvectors have the largest eigenvalues retains most of the data variance, while the resulting components are uncorrelated. In particular, this approach eliminates redundant information and extracts the most important parts of the fused image. In addition, PCA gives weight to elements that are resilient to noise. In this way, PCA reduces spatial blurring and distortions. Our earlier studies [Mohan, Kiran and Kumar 2020; Mohan et al. 2020] detail the phases of the PCA algorithm.
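As an illustration of how PCA can yield fusion weights, the following NumPy sketch follows the commonly used two-image PCA weighting rule: the eigenvector of the 2x2 covariance matrix with the largest eigenvalue is normalized so its entries sum to one. It is a sketch under stated assumptions, not the modified PCA described in the authors' earlier studies; the function names `pca_fusion_weights` and `pca_fuse` are hypothetical, and the inputs are assumed to be two positively correlated images of the same size.

```python
import numpy as np

def pca_fusion_weights(img_a, img_b):
    """Return two weights (summing to 1) from the principal eigenvector.

    Assumes the two images are positively correlated, so both components
    of the principal eigenvector share a sign.
    """
    data = np.stack([np.ravel(img_a), np.ravel(img_b)]).astype(float)
    cov = np.cov(data)                      # 2x2 covariance, rows are variables
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    principal = np.abs(eigvecs[:, np.argmax(eigvals)])  # abs removes the sign ambiguity
    return principal / principal.sum()

def pca_fuse(img_a, img_b):
    """Weighted sum of the two images using the PCA-derived weights."""
    w_a, w_b = pca_fusion_weights(img_a, img_b)
    return w_a * np.asarray(img_a, dtype=float) + w_b * np.asarray(img_b, dtype=float)
```

The image with the larger variance along the principal direction receives the larger weight, which is why this rule tends to favor the input that carries more contrast.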

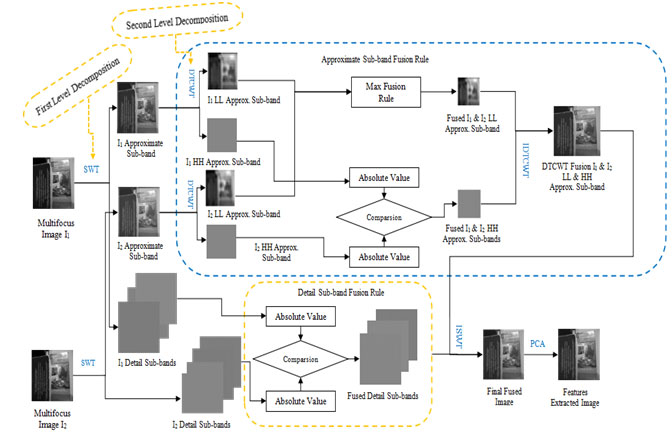

Flow Diagram of Proposed Approach: The flow diagram of the proposed work is shown in Figure 1. It consists of two stages: hybrid wavelet-based image fusion (i.e., SWT and DTCWT) and PCA. In the first stage, decomposition and fusion are performed with hybrid wavelets, i.e., a combination of SWT and DTCWT, which eliminates spatial distortions and blurring artifacts. In the second stage, the PCA algorithm is applied to extract information efficiently. The process steps are described below.

1. Read the multi-focus source images.
2. Decompose the input multi-focus images into approximation and detail sub-bands using SWT (first level of decomposition).
3. Apply DTCWT to the approximation sub-bands, decomposing them into LL and HH components (second level of decomposition).
4. Apply the maximum fusion rule to the LL components obtained from DTCWT; the resulting sub-band contains the fused LL components.
5. Compare the intensities of the HH components obtained from DTCWT and select the one with the highest intensity.
6. Use the inverse DTCWT (IDTCWT) to combine the fused LL and HH components into a fused approximation sub-band.
7. Separately, compare the first-level detail sub-bands and select those with the highest intensity as the final detail sub-bands.
8. Create the fused image by applying the inverse SWT to the DTCWT-fused sub-band and the final detail sub-bands.
9. Apply PCA to derive features from the fused image.
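The coefficient selection rules used in the steps above can be sketched as follows. The element-wise maximum mirrors the "maximum fusion" described for the LL components; treating "highest intensity" for detail coefficients as largest absolute value is an assumption, as are the function names `fuse_max` and `fuse_max_abs`.

```python
import numpy as np

def fuse_max(band_a, band_b):
    """Element-wise maximum of two sub-bands of the same shape."""
    return np.maximum(band_a, band_b)

def fuse_max_abs(band_a, band_b):
    """Keep, per position, the coefficient with the larger magnitude.

    Assumed interpretation of 'highest intensity' for detail sub-bands,
    whose coefficients can be negative.
    """
    a = np.asarray(band_a, dtype=float)
    b = np.asarray(band_b, dtype=float)
    return np.where(np.abs(a) >= np.abs(b), a, b)

approx_a = np.array([[1.0, 5.0], [3.0, 2.0]])
approx_b = np.array([[4.0, 0.0], [3.0, 7.0]])
print(fuse_max(approx_a, approx_b))      # [[4. 5.] [3. 7.]]

detail_a = np.array([[-3.0, 1.0]])
detail_b = np.array([[2.0, -4.0]])
print(fuse_max_abs(detail_a, detail_b))  # [[-3. -4.]]
```

Selecting by magnitude keeps the sign of the stronger detail coefficient, which matters because edges can appear as either positive or negative responses.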

Performance analysis of proposed method: The proposed method is tested with two kinds of performance measures, subjective assessment and objective evaluation, and is compared with recently reported state-of-the-art methods. The objective analysis compares the values of quantitative image fusion measures. It compares the fused image with the input images in terms of spectral and spatial similarity. Quantitative analysis can be done in two ways: with or without a reference image (Jagalingam and Hegde 2015).

Figure 1: The flow diagram of the proposed hybrid wavelet and PCA-based image fusion algorithm

Evaluation of quality measures: In this paper, eleven popular metrics, namely QAB/F (Total Fusion Performance), E(F) (Entropy), QE (Edge-dependent Fusion Quality), AGF (Average Gradient), GM (Gray Mean Value), CC (Correlation Coefficient), SSIM (Structural Similarity), SD (Standard Deviation), EI (Edge Intensity), ID (Image Definition), and Q0 (Universal Image Quality Index), are employed to quantitatively evaluate the performance of the different fusion methods (Wang et al. 2004; Li et al. 2011; Wang and Bovik 2002; Yang 2014).
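A few of these metrics have short, widely used definitions that can be sketched in NumPy. The code below is illustrative only: it assumes 8-bit grayscale inputs, uses one common form of the average gradient, and computes the universal quality index of Wang and Bovik (2002) globally rather than over sliding windows. The exact variants used to produce the reported tables are not specified in the text, so values from this sketch need not match them, and all function names are hypothetical.

```python
import numpy as np

def entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram, assuming values in [0, levels)."""
    hist, _ = np.histogram(img, bins=levels, range=(0, levels))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def average_gradient(img):
    """Mean of sqrt((gx^2 + gy^2) / 2) using forward differences."""
    x = np.asarray(img, dtype=float)
    gx = x[:-1, 1:] - x[:-1, :-1]   # horizontal difference
    gy = x[1:, :-1] - x[:-1, :-1]   # vertical difference
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))

def correlation_coefficient(img_a, img_b):
    """Pearson correlation between two images of the same size."""
    return float(np.corrcoef(np.ravel(img_a), np.ravel(img_b))[0, 1])

def universal_quality_index(img_a, img_b):
    """Global Q0 = 4*cov*mean_a*mean_b / ((var_a + var_b) * (mean_a^2 + mean_b^2)).

    Undefined (division by zero) when both images are constant or both means are zero.
    """
    x = np.ravel(img_a).astype(float)
    y = np.ravel(img_b).astype(float)
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(ddof=1), y.var(ddof=1)
    cxy = np.cov(x, y)[0, 1]
    return float(4.0 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))
```

Entropy and average gradient need only the fused image, whereas the correlation coefficient and Q0 compare the fused image against a source or reference image, which corresponds to the "with or without a reference image" distinction made above.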

RESULTS AND DISCUSSION

In this paper, a hybrid wavelet (i.e., SWT and DTCWT) is used to perform image fusion, and PCA is applied to extract the image features. Quality measures such as QAB/F, SSIM, E(F), AGF, EI, CC, Q0, QE, SD, GM, and ID are employed to assess the performance of the proposed algorithm. The quality measures obtained for the fused image are compared with those of existing state-of-the-art methods. These criteria measure the resemblance of the fused images to the sources and their robustness against distortions.

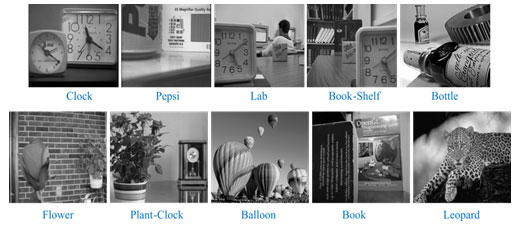

The choice of source images matters in multi-focus image fusion. Experiments were carried out on many multi-focus images from different areas, such as biomedical and remote sensing, using different data sets. The Clock, Desk/Book-Shelf, Book, Flower, Lab, Leopard, Pepsi, Craft, Balloon, Calendar, Wine/Bottle, Plant-Clock/Corner, Grass, Medical, and Remote Sensing images are used in this work, and the results are compared with existing methods reported in the literature (Panigrahy et al. 2020; Zhang 2021).

Comparison for multi-focus clock image: Figure 2 depicts the assessment of the first multifocus image, the clock. Figures 2(a), (b), and (c) show the original, the left-focused, and the right-focused images, respectively. Figure 2(d) depicts the sharp image obtained with the proposed method. To evaluate the proposed approach, several metrics are computed, including QAB/F, SSIM, E(F), AGF, SF, CC, and QE. Table 1 compares the quality metrics of the proposed hybrid wavelet approach with those of (Panigrahy et al. 2020), with the better (higher) value of each metric in bold. The proposed method attains the higher value on five of the six metrics, the exception being AGF.

Figure 2: (Clock): a. Original Image; b, c. Multifocus input images; d. Proposed fusion.

Table 1. Comparison of quality metrics of the proposed scheme with (Panigrahy et al. 2020)

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | 7.3854 | **7.4042** |
| AGF | **6.0719** | 5.2238 |
| CC | 0.9808 | **0.9812** |
| QAB/F | 0.8968 | **0.9996** |
| SSIM | 0.9031 | **0.9857** |
| QE | 0.8538 | **0.9058** |

Comparison for multi-focus desk image: The second multifocus image is the desk, displayed in Figure 3, which comprises the original image, the multifocus input images, and the result of the proposed fusion approach. The parameters SSIM, QE, CC, AGF, QAB/F, and E(F) are computed to evaluate the performance of the proposed methodology. Table 2 compares these quality metrics with those reported by (Panigrahy et al. 2020), with the better (higher) value of each metric in bold. The proposed hybrid wavelet method attains the higher value on four of the six metrics; AGF and CC are slightly lower.

Figure 3: (Desk): a. Original Image; b, c. Multifocus input images; d. Proposed fusion.

Table 2. Quantitative analysis of proposed and existing image fusion scheme (Panigrahy et al. 2020).

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | 7.346 | **7.3550** |
| AGF | **8.215** | 8.2089 |
| CC | **0.9644** | 0.9627 |
| QAB/F | 0.8958 | **0.9996** |
| SSIM | 0.8693 | **0.9887** |
| QE | 0.8669 | **0.9280** |

Comparison for multi-focus book image: Figure 4 illustrates the assessment of the third multifocus image, the book. The original image and the multifocus input images are shown in Figures 4(a), (b), and (c), respectively, and the all-in-focus image produced by the proposed method is shown in Figure 4(d). The parameters QE, QAB/F, CC, AGF, SSIM, and E(F) are computed to evaluate the performance of the proposed methodology. Table 3 compares these quality metrics with those reported by (Panigrahy et al. 2020), with the better (higher) value of each metric in bold. The proposed method attains the higher value on four of the six metrics; AGF and SSIM are lower.

Figure 4: (Book): a. Original Image; b, c. Multifocus input images; d. Proposed fusion.

Table 3. Quantitative analysis of proposed and existing image fusion scheme (Panigrahy et al. 2020).

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | 7.2957 | **7.3999** |
| AGF | **13.7059** | 12.3623 |
| CC | 0.9825 | **0.9898** |
| QAB/F | 0.9145 | **0.9996** |
| SSIM | **0.9539** | 0.9227 |
| QE | 0.8838 | **0.9195** |

Comparison of multi-focus flower image: Figure 5 depicts the evaluation of the fourth multifocus image, a flower. The original image is shown in Figure 5(a). The left-focused and right-focused input images are shown in Figures 5(b) and (c), respectively. Figure 5(d) depicts the sharp image obtained with the proposed method. The effectiveness of the proposed method is evaluated by calculating QE, SSIM, QAB/F, CC, AGF, and E(F). Table 4 compares these quality metrics with those reported by (Panigrahy et al. 2020). The proposed method attains the higher value on four of the six metrics; E(F) and CC are slightly lower.

Figure 5: (Flower): a. Original Image; b, c. Multifocus input images; d. Proposed fusion

Table 4. Quantitative analysis of proposed and existing image fusion scheme (Panigrahy et al. 2020)

| Evaluation Metric | Panigrahy et al. 2020 | Proposed Method |
| --- | --- | --- |
| E(F) | **7.2212** | 7.1982 |
| AGF | 14.3156 | **14.3329** |
| CC | **0.9687** | 0.96529 |
| QAB/F | 0.8869 | **0.9996** |
| SSIM | 0.9477 | **0.9890** |
| QE | 0.8617 | **0.9066** |

Comparison for multi-focus lab image: Figure 6 illustrates the assessment of the fifth multifocus image, the lab. The parameters QE, SSIM, QAB/F, CC, AGF, and E(F) are computed to evaluate the performance of the proposed methodology. Table 5 compares these quality metrics with those reported by (Panigrahy et al. 2020), with the better (higher) value of each metric in bold. The proposed method attains the higher value on five of the six metrics, the exception being CC.

Figure 6: (Lab): a. Original Image; b, c. Multifocus input images; d. Proposed fusion.

Table 5. Quantitative analysis of proposed and existing image fusion scheme (Panigrahy et al. 2020).

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | 7.1178 | **7.1292** |
| AGF | 6.6468 | **6.6797** |
| CC | **0.9791** | 0.9774 |
| QAB/F | 0.8996 | **0.9996** |
| SSIM | 0.9122 | **0.9913** |
| QE | 0.8675 | **0.9258** |

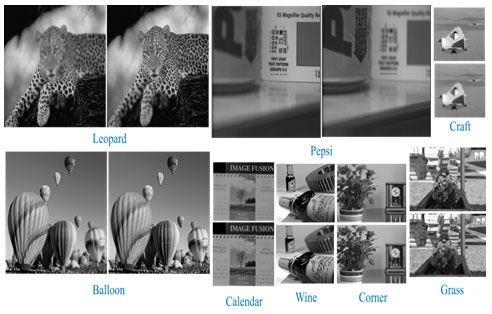

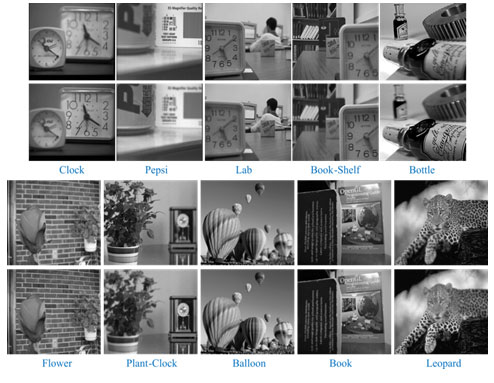

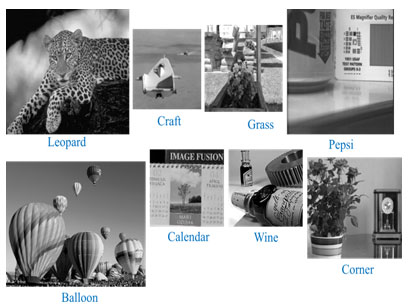

Analysis of a few more image pairs: No single strategy can guarantee optimal subjective and objective results for every image set. For this reason, several additional pairs of multi-focus images are presented in Figures 7 and 8. Figures 9 and 10 show the fused images obtained by applying the proposed method to these pairs. Tables 6 and 7 report the average objective evaluation of the different methods for the image pairs in Figures 7 and 8, with the best result for each metric in bold. Compared with the methods in the literature (Panigrahy et al. 2020; Zhang 2021), the proposed method attains the higher value on four of the six metrics in Table 6 (all except E(F) and CC) and the highest value on six of the eight metrics in Table 7 (all except EI and ID).

Figure 7: Some multifocus image pairs.

Figure 8: Some multi-focus image pairs.

Figure 9: Fused images for the multi-focus image pairs shown in Figure 7.

Table 6. Comparative analysis of quantitative measures (average value) with (Panigrahy et al. 2020)

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | **7.2766** | 7.2530 |
| AGF | 15.1203 | **16.0783** |
| CC | **0.9736** | 0.97029 |
| QAB/F | 0.898 | **0.9635** |
| SSIM | 0.872 | **0.9756** |
| QE | 0.8336 | **0.9102** |

Figure 10: Fused images for the multi-focus image pairs shown in Figure 8.

Table 7. Comparative analysis of quantitative measures (average) with (Zhang 2021).

| Fusion Methods | AG | EI | GM | SD | ID | QAB/F | Q0 | QE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DWT – CSR | 7.7793 | **78.6239** | 104.4005 | 52.3230 | **9.8798** | 0.7360 | 0.8168 | 0.6941 |
| DTCWT – CSR | 7.6828 | 77.7976 | 103.8605 | 52.0522 | 9.7117 | 0.7745 | 0.8152 | 0.7433 |
| CVT – CSR | 7.6924 | 77.8838 | 104.4969 | 52.1355 | 9.7320 | 0.7420 | 0.8051 | 0.7120 |
| NSCT – CSR | 7.6807 | 77.7429 | 104.6126 | 52.2404 | 9.7074 | 0.7571 | 0.8327 | 0.7186 |
| Proposed | **12.5994** | 67.5573 | **105.0181** | **52.8417** | 8.6535 | **0.9997** | **0.9232** | **0.9233** |

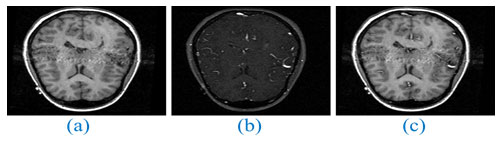

Analysis of remote sensing and a medical image pair: Finally, the proposed methodology is tested on a medical and a remote sensing image pair. These pairs make it possible to determine whether the proposed method performs well in different contexts. Tables 8 and 9 report the objective results for these two image pairs, and Figures 11 and 12 show the fusion results for the "medical" and "remote sensing" image pairs, respectively.

The parameters QE, SSIM, QAB/F, CC, AGF, and E(F) are calculated to assess how well the proposed approach performs, and the results are compared with those reported by (Panigrahy et al. 2020). The evaluation findings are displayed in Tables 8 and 9, with the higher value of each metric in bold. The proposed method attains higher E(F), SSIM, and QE values in Table 8 and higher QAB/F, SSIM, and QE values in Table 9, while the remaining metrics are lower.

Figure 11: (Medical): a, b. source images; c. Proposed fusion.

Table 8. Comparative analysis of quantitative measures (average) with (Panigrahy et al. 2020).

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | 6.4646 | **6.4741** |
| AGF | **14.6353** | 14.4300 |
| CC | **0.9255** | 0.9204 |
| QAB/F | **0.8704** | 0.8636 |
| SSIM | 0.7027 | **0.9632** |
| QE | 0.813 | **0.8958** |

Figure 12: (Remote Sensing): a. Original Image; b, c. Multifocus input images; d. Proposed fusion.

Table 9. Comparative analysis of quantitative measures (average) with (Panigrahy et al. 2020).

| Evaluation Metric | Panigrahy et al. 2020 | Proposed |
| --- | --- | --- |
| E(F) | **7.195** | 7.1433 |
| AGF | **12.6765** | 12.4640 |
| CC | **0.5219** | 0.4751 |
| QAB/F | 0.8229 | **0.9996** |
| SSIM | 0.6679 | **0.8068** |
| QE | 0.6085 | **0.7351** |

CONCLUSION

Traditional wavelet-based fusion algorithms suffer from edge loss and spatial distortions. The proposed methodology, which uses an SWT-DTCWT hybrid wavelet with PCA, addresses these limitations. The main objectives of the image fusion process are to achieve better visual quality, to extract relevant information from the source images, and to preserve edges and important regions with acceptable quality. The proposed work is validated on a wide range of data sets using statistical measures such as AG, SD, SSIM, and QAB/F. The results indicate that the proposed method produces better visual perception with less distortion.

ACKNOWLEDGEMENTS

Funding: This research did not receive any specific grant from funding agencies in the public.

Conflict of Interests: The authors declare no conflict of interest.

Data Availability Statement: Data are available on request.

REFERENCES

Amin-Naji M and Aghagolzadeh A (2018). Multi-Focus Image Fusion in DCT Domain using Variance and Energy of Laplacian and Correlation Coefficient for Visual Sensor Networks, Journal of AI and Data Mining, 6(2), 233-250, DOI: 10.22044/JADM.2017.5169.1624.

Aymaz S and Köse C (2019). A novel image decomposition-based hybrid technique with super-resolution method for multi-focus image fusion, Information Fusion, 45, 113–127, DOI: https://doi.org/10.1016/j.inffus.2018.01.015.

Aymaz S, Köse C and Aymaz Ş (2020). Multi-focus image fusion for different datasets with super-resolution using gradient-based new fusion rule, Multimedia Tools and Applications, 79, 13311–13350, DOI: https://doi.org/10.1007/s11042-020-08670-7.

Borwonwatanadelok P, Rattanapitak W and Udomhunsakul S (2009). Multi-Focus Image Fusion based on Stationary Wavelet Transform and extended Spatial Frequency Measurement, 2009 International Conference on Electronic Computer Technology, IEEE, 77-81, DOI: 10.1109/ICECT.2009.94.

Bouzos O, Andreadis I and Mitianoudis N (2022). Conditional Random Field-Guided Multi-Focus Image Fusion, J. Imaging, 8(9), 240, DOI: https://doi.org/10.3390/jimaging8090240.

Chai Y, Li H and Li Z (2011). Multifocus image fusion scheme using focused region detection and multiresolution, Optics Communications, 284, 4376-4389, DOI: 10.1016/j.optcom.2011.05.046.

He L, Yang X, Lu L et al., (2020). A novel multi-focus image fusion method for improving imaging systems by using cascade-forest model, EURASIP Journal on Image and Video Processing, 5, DOI: https://doi.org/10.1186/s13640-020-0494-8.

Jagalingam P and Hegde AV (2015). A Review of Quality Metrics for Fused Image. Aquatic Procedia, Elsevier, 4, 133-142, DOI: 10.1016/j.aqpro.2015.02.019.

Li H, Wei S and Chai Y (2012). Multifocus image fusion scheme based on feature contrast in the lifting stationary wavelet domain, EURASIP Journal on Advances in Signal Processing, 39, DOI: https://doi.org/10.1186/1687-6180-2012-39.

Li J, Yuan G and Fan H (2019). Multifocus Image Fusion Using Wavelet-Domain-Based Deep CNN, Computational Intelligence and Neuroscience, DOI: https://doi.org/10.1155/2019/4179397.

Li S, Yang B and Hu J (2011). Performance comparison of different multi-resolution transforms for image fusion, Information Fusion. 12(2), 74-84, DOI: 10.1016/j.inffus.2010.03.002.

Liu J, Yang J and Li B (2014). Multi-focus Image Fusion by SML in the Shearlet Subbands, TELKOMNIKA Indonesian Journal of Electrical Engineering, 12(1), 618 – 626, DOI: http://dx.doi.org/10.11591/telkomnika.v12i1.3365.

Ma X, Wang Z, Hu S et al., (2022). Multi-Focus Image Fusion Based on Multi-Scale Generative Adversarial Network, Entropy, 24(5), 582, DOI: https://doi.org/10.3390/e24050582.

Meher B, Agrawal S, Panda R et al., (2019). A survey on region based image fusion methods, Information Fusion, 48, 119-132, DOI: https://doi.org/10.1016/j.inffus.2018.07.010.

Mohan CR, Chouhan K, Rout RK et al., (2022). Improved Procedure for Multi-Focus Images Using Image Fusion with qshiftN DTCWT and MPCA in Laplacian Pyramid Domain, Appl. Sci., 12(19), 9495, DOI: https://doi.org/10.3390/app12199495.

Mohan CR, Kiran S and Kumar AA (2020). Efficiency of Image Fusion Technique Using DCT-FP with Modified PCA for Biomedical Images, Biosc.Biotech.Res.Comm., 13(3), 1080-1087, DOI: http://dx.doi.org/10.21786/bbrc/13.3/14.

Mohan CR, Kiran S, Vasudeva et al., (2020). Multi-Focus Image Fusion Method with QshiftN-DTCWT and Modified PCA in Frequency Partition Domain, ICTACT Journal on Image and Video Processing, 11(1), 2275-2282, DOI: 10.21917/ijivp.2020.0323.

Naidu VPS (2011). Image Fusion Technique using Multi-resolution Singular Value Decomposition, Defence Science Journal, 61(5), 479-484, DOI: 10.14429/dsj.61.705.

Naidu VPS and Raol JR (2008). Pixel-level image fusion using wavelets and principal component analysis, Defence Science Journal, 58(3), 338–352, DOI:10.14429/dsj.58.1653.

Nason GP and Silverman BW (1995). The Stationary Wavelet Transform and Some Statistical Applications, Wavelets and Statistics, Springer, 103, 281–299, DOI: https://doi.org/10.1007/978-1-4612-2544-7_17.

Nejati M, Samavi S, Karimi N et al., (2017). Surface area-based focus criterion for multi-focus image fusion, Information Fusion, 36, 284-295, DOI: https://doi.org/10.1016/j.inffus.2016.12.009.

Panigrahy C, Seal A and Mahato NK (2020). Fractal dimension based parameter adaptive dual channel PCNN for multi-focus image fusion, Optics and Lasers in Engineering, 133, DOI: https://doi.org/10.1016/j.optlaseng.2020.106141.

Petrovic V and Xydeas CS (2004). Gradient-based multiresolution image fusion, IEEE Transactions on Image Processing, 13(2), 228-237, DOI: 10.1109/TIP.2004.823821.

Pujar J and Itkarkar RR (2016). Image Fusion Using Double Density Discrete Wavelet Transform, International Journal of Computer Science and Network, 5(1), 6-10.

Radha N and Babu TR (2019). Performance evaluation of quarter shift dual tree complex wavelet transform based multifocus image fusion using fusion rules, International Journal of Electrical and Computer Engineering, 9(4), 2377-2385, DOI: 10.11591/ijece.v9i4.pp2377-2385.

Shah P, Merchant SN and Desai UB (2013). Multifocus and multispectral image fusion based on pixel significance using multiresolution decomposition, Signal Image and Video Processing, 7, 95-109, DOI: 10.1007/s11760-011-0219-7.

Sharma A and Gulati T (2017). Change Detection from Remotely Sensed Images Based on Stationary Wavelet Transform, International Journal of Electrical and Computer Engineering, 7(6), 3395-3401, DOI: 10.11591/ijece.v7i6.pp3395-3401.

Shreyamsha Kumar BK (2013). Multifocus and multispectral image fusion based on pixel significance using discrete cosine harmonic wavelet transform, Signal Image and Video Processing, 7(6), 1125-1143, DOI: 10.1007/s11760-012-0361-x.

Suhail MM, Ganeshkumar PV, Balamurugan R et al., (2014). Multi-focus image fusion using discrete wavelet transform algorithm, International Journal of Advanced Research in Computer Science, 5(7).

Wahyuni IS and Sabre R (2015). Wavelet Decomposition in Laplacian Pyramid for Image Fusion, International Journal of Signal Processing Systems, 4(1), 37-44, DOI:10.12720/ijsps.4.1.37-44.

Wang W-W, Shui P-L and Song G-X (2003). Multifocus Image Fusion in Wavelet Domain, Second International Conference on Machine Learning and Cybernetics, IEEE, 5(2), 2887-2890, DOI:10.1109/ICMLC.2003.1260054.

Wang Z and Bovik AC (2002). A universal image quality index, IEEE Signal Processing Letters, 9(3), 81–84, DOI: 10.1109/97.995823.

Wang Z, Bovik AC, Sheikh HR et al., (2004). Image quality assessment: from error visibility to structural similarity, IEEE Transactions on Image Processing, 13(4), 600–612, DOI:10.1109/TIP.2003.819861.

Wang Z, Li X, Duan H et al., (2019). Multifocus image fusion using convolutional neural networks in the discrete wavelet transform domain, Multimedia Tools and Applications, 78, 34483-34512, DOI: https://doi.org/10.1007/s11042-019-08070-6.

Yang G (2014). Fusion of infrared and visible images based on multifeatures, Opt. Precis. Eng., 22(2), 489–496.

Yang Y, Tong S, Huang S et al., (2014). Dual-Tree Complex Wavelet Transform and Image Block Residual-Based Multi-Focus Image Fusion in Visual Sensor Networks, Sensors, 14, 22408-22430, DOI:10.3390/s141222408.

Zhang B, Zhang C, Yuanyuan L et al., (2014). Multi-focus image fusion algorithm based on compound PCNN in Surfacelet domain, Optik, 125(1), 296-300, DOI: http://dx.doi.org/10.1016/j.ijleo.2013.07.002.

Zhang C (2021). Multifocus image fusion using multiscale transform and convolution sparse representation, International Journal of Wavelets, Multiresolution and Information Processing, 19(1), DOI:10.1112/S02190691320500617.

Zhang C and Feng Z (2022). Convolutional analysis operator learning for multifocus image fusion, Signal Processing: Image Communication, 103, 116632, https://doi.org/10.1016/j.image.2022.116632.

Zou Y, Liang X and Wang T (2013). Visible and Infrared Image Fusion using the Lifting Wavelet, TELKOMNIKA, 11(11), 6290-6295, DOI:10.11591/telkomnika.v11i11.2898.