1 Department of Electronics and Electrical Communication, Higher Institute of Engineering, El Shorouk Academy, Cairo, Egypt

2 Cairo University and Hail University, Cairo, Egypt

Corresponding author Email: rabie@rabieramadan.org

Article Publishing History

Received: 03/11/2018

Accepted After Revision: 19/07/2018

The International Telecommunication Union (ITU) launched Internet Protocol Television (IPTV) as a TV platform that provides a certain level of Quality of Service (QoS) and Quality of Experience (QoE). Based on our experience and to the best of our knowledge, there is no real implementation of IPTV on any of the well-known simulators such as OPNET. This paper is a step towards explaining the main components of IPTV to be implemented on OPNET. We present a detailed study of IPTV as a new Internet video entertainment platform and, for the benefit of researchers in this field, we define a set of performance measures. In addition, we propose a two-stage compression technique for enhancing IPTV performance. Moreover, we evaluate network performance for uncompressed video delivery at different data rates in small- and large-scale networks.

IPTV; OPNET; Performance; QoS; QoE

Sabry E, Ramadan R. Implementation of Video Codecs Over IPTV Using Opnet. Biosc.Biotech.Res.Comm. VOL 12 NO1 (Spl Issue February) 2019. Available from: https://bit.ly/2YxfZsi

Introduction

Internet Protocol Television (IPTV) [4] was launched as a new television platform aimed at satisfying users' requirements and their demand for attractive services in terms of offered quality and continuous renewal. IPTV belongs to a new breed of service systems that provide digital TV access over an IP packet-switched transport medium, supporting video entertainment and offering live or on-demand multimedia services at the user's request. IPTV services are delivered over secure, end-to-end, operator-managed networks with the desired Quality of Service (QoS) and Quality of Experience (QoE), carried over the Internet Protocol (IP) and accessed through a set-top box (STB).

Indeed, IPTV delivery is sensitive to packet delay, packet loss, out-of-order delivery, and variation in packet arrival times, all of which affect the quality of the received service. In addition, the speed/bandwidth of the IPTV last mile limits the number of frames per second (FPS) that can be delivered to render moving pictures. For Standard Definition TV (SDTV) delivery, a link speed of 4 Mbps per channel is recommended, while High Definition TV (HDTV) calls for 20 Mbps per channel. Consequently, the quality of the delivered service is reduced and the number of simultaneous TV channel streams is limited, typically to one to three channels per link. This can be unacceptable both for users, in terms of QoS and QoE, and for service providers, in terms of the efficient use of the available link bandwidth. In practice, improvements in broadband speeds and advances in audio/video (AV) compression techniques are the path forward for IPTV technology.
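The channel limit follows from simple division of the last-mile speed by the per-channel rate. The sketch below assumes each channel needs exactly the recommended 4 Mbps (SDTV) or 20 Mbps (HDTV) and ignores protocol overhead and any other traffic on the link; the link speeds are hypothetical examples.

```python
# Per-channel rates recommended in the text (Mbps).
SDTV_MBPS = 4.0
HDTV_MBPS = 20.0


def channels_per_link(link_mbps: float, channel_mbps: float) -> int:
    """Number of whole channel streams that fit on a last-mile link."""
    if channel_mbps <= 0:
        raise ValueError("channel rate must be positive")
    return int(link_mbps // channel_mbps)


if __name__ == "__main__":
    # Hypothetical last-mile speeds, for illustration only.
    for link in (8.0, 12.0, 25.0, 50.0):
        print(f"{link:5.1f} Mbps link: "
              f"{channels_per_link(link, SDTV_MBPS)} SDTV, "
              f"{channels_per_link(link, HDTV_MBPS)} HDTV")
```

With these assumptions a 12 Mbps link carries three SDTV channels but no HDTV channel, which is consistent with the one-to-three-channel range quoted above.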

Nowadays, service providers face demanding users who expect increasingly advanced video services and other entertainment such as three-dimensional (3-D) movies, games, and high-quality video. Recently, ultra-high-definition (UHD) television (Super Hi-Vision, Ultra HD television, or UHDTV) was introduced, comprising two digital video formats: 4K UHD (2160p) and 8K UHD (4320p) [15]. These formats are approved by the International Telecommunication Union (ITU).

In addition, the Consumer Electronics Association selected "Ultra HD" as an umbrella term after extensive consumer research. In October 2012, it announced that Ultra HD would be used for displays with a 16:9 aspect ratio and a minimum resolution of 3840×2160 pixels. This poses a challenge for television service providers and calls for a strong compression revolution, since compression is considered the enabling technology that bridges the gap between the huge amount of video data required and limited hardware capability.
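The size of that gap can be estimated from the uncompressed bit rate, which is pixels per frame times bits per pixel times frames per second. The sketch assumes 30 fps and two common 8-bit sampling formats (24 bits per pixel for 4:4:4, 12 for 4:2:0); these formats are illustrative choices, not values from the text.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bit rate = pixels per frame x bits per pixel x frames per second."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    # Assumed formats: 8-bit 4:4:4 (24 bpp) and 8-bit 4:2:0 (12 bpp), both at 30 fps.
    for name, (w, h) in {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}.items():
        for bpp in (24, 12):
            gbps = raw_bitrate_bps(w, h, bpp, 30) / 1e9
            print(f"{name}, {bpp} bpp: {gbps:.2f} Gbps uncompressed")
```

Even the 4:2:0 case of 4K UHD needs roughly 3 Gbps uncompressed, more than a hundred times the 20 Mbps recommended per HDTV channel, which is why compression is described as the enabling technology.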

ISO, jointly with IEC, established MPEG (the Moving Picture Experts Group) [14], which produces a set of AV compression standards and develops new video coding recommendations and international standards. In 2013, the ITU approved High Efficiency Video Coding (HEVC), or H.265, as a new video coding standard built on the ITU-T H.264/MPEG-4 AVC standards. H.265 opened a window for video transmission using half the bandwidth of its predecessor, H.264. HEVC was developed to deliver better performance than earlier standards: it can generate smaller video files than H.264 at the same video quality. It has the same basic structure as previous standards such as MPEG-2 and H.264 [1][10]. However, it contains many incremental improvements, such as more flexible picture partitioning from large to small units, greater flexibility in prediction modes and transform block sizes, and more sophisticated interpolation and deblocking filters. In addition, it uses more sophisticated prediction and signaling of modes and motion vectors, and it supports efficient parallel processing.

In May 2014, the JCT-VC released a comparison between the HEVC Main profile and the H.264/MPEG-4 AVC High profile. The comparison is based on subjective video performance, measured with the mean opinion score (MOS) metric, over a range of resolutions. The results show an average bit-rate reduction of 59% for HEVC, with per-resolution reductions of 52% for 480p, 56% for 720p, 62% for 1080p, and 64% for 4K UHD.
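As a rough planning aid, the per-resolution reductions can be applied to an existing H.264 bit rate to estimate the HEVC rate at similar perceived quality. This is a sketch under the assumption that the average reductions transfer to an individual stream, which real content will not always follow; the 20 Mbps input is the HDTV figure quoted earlier, used here as a hypothetical example.

```python
# Average HEVC Main vs. H.264/AVC High bit-rate reductions reported by JCT-VC (MOS-based).
JCTVC_REDUCTION = {"480p": 0.52, "720p": 0.56, "1080p": 0.62, "4K UHD": 0.64}


def hevc_bitrate_estimate(h264_bps: float, resolution: str) -> float:
    """Rough HEVC bit rate for the same perceived quality as an H.264 stream."""
    return h264_bps * (1.0 - JCTVC_REDUCTION[resolution])


def mean_reduction() -> float:
    """Unweighted mean of the per-resolution reductions."""
    return sum(JCTVC_REDUCTION.values()) / len(JCTVC_REDUCTION)


if __name__ == "__main__":
    print(f"simple mean reduction: {mean_reduction():.1%}")  # 58.5%
    # Hypothetical 20 Mbps H.264 HDTV channel.
    print(f"1080p HEVC estimate: {hevc_bitrate_estimate(20e6, '1080p') / 1e6:.1f} Mbps")  # 7.6 Mbps
```

The unweighted mean of the four figures is 58.5%, consistent with the reported average of about 59%, although JCT-VC may have averaged over test sequences differently.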

VP9 [16] is a royalty-free video coding format developed by Google; its development started in the third quarter of 2011. VP9 reduces the bit rate by 50% compared with its predecessor VP8 while maintaining the same video quality, and it was developed with the aim of achieving better compression efficiency than HEVC. VP9 has the same basic structure as VP8, but with many design improvements, such as support for 64×64-pixel superblocks with a quadtree coding structure. Currently, several researchers are interested in comparing the performance of these video codecs. According to some experimental results (Draft version 2 of HEVC, 2014), the coding efficiency of VP9 is inferior to both H.264/MPEG-AVC and H.265/MPEG-HEVC, with an average bit-rate overhead at the same objective quality of 8.4% and 79.4%, respectively.
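The quadtree idea behind superblocks can be sketched as a recursive split: a 64×64 block is kept whole if it is "simple enough", otherwise it is divided into four quadrants and each is examined in turn, down to a minimum size. The split rule below (pixel variance against a fixed threshold) is a hypothetical stand-in; real VP9 encoders choose partitions by rate-distortion optimization and also allow rectangular splits, which are omitted here.

```python
from statistics import pvariance


def split_superblock(block, x=0, y=0, size=64, min_size=8, threshold=100.0):
    """Return the leaf blocks (x, y, size) of a square quadtree partition.

    block: 2-D list of luma samples covering at least size x size pixels.
    The variance threshold is an illustrative split criterion only.
    """
    pixels = [block[r][c] for r in range(y, y + size) for c in range(x, x + size)]
    if size <= min_size or pvariance(pixels) <= threshold:
        return [(x, y, size)]  # homogeneous (or minimal) block: stop splitting
    half = size // 2
    leaves = []
    for dy in (0, half):  # visit the four quadrants in raster order
        for dx in (0, half):
            leaves += split_superblock(block, x + dx, y + dy, half, min_size, threshold)
    return leaves


if __name__ == "__main__":
    sb = [[128] * 64 for _ in range(64)]
    for r in range(8):  # add a detailed 8x8 patch in the top-left corner
        for c in range(8):
            sb[r][c] = 255 if (r + c) % 2 else 0
    print(split_superblock(sb))
```

A flat superblock stays as a single 64×64 leaf, while the textured corner forces splits only along the path that contains it, which is how a quadtree spends fine partitions only where detail exists.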

Network simulation provides a detailed way to model network behavior by calculating the continuous interactions between modeled devices in their operational environment. Discrete event simulation (DES) is a typical network simulation method used in large-scale simulation studies because it provides a more accurate and realistic model. However, DES requires considerable computing power, and the process can be time-consuming in large-scale studies. The OPNET simulator can run in both explicit DES and hybrid simulation modes and supports other features such as co-simulation, parallel simulation, High Level Architecture (HLA), and system-in-the-loop interactive simulation. It offers a large library of models that simulate most existing hardware devices and today's most cutting-edge communication protocols.
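The core of any DES engine is an event list ordered by time: the clock jumps from one event to the next instead of advancing in fixed steps. The toy simulator below models a single FIFO transmitter (packet arrivals and departures) to illustrate the technique; it is a minimal sketch and not a description of OPNET's simulation kernel.

```python
import heapq
from collections import deque


def simulate_fifo_link(arrivals, packet_bits, link_bps):
    """Toy discrete-event simulation of one FIFO transmitter.

    arrivals: packet arrival times in seconds (any order).
    Returns, per packet, its queuing + transmission delay in seconds.
    """
    service = packet_bits / link_bps
    events = []  # heap of (time, tie_breaker, kind, packet_id)
    counter = 0
    for pid, t in enumerate(arrivals):
        heapq.heappush(events, (t, counter, "arrival", pid))
        counter += 1
    waiting = deque()
    busy = False
    delays = [0.0] * len(arrivals)
    while events:
        now, _, kind, pid = heapq.heappop(events)  # advance the clock to the next event
        if kind == "arrival":
            waiting.append(pid)
        else:  # "departure": the packet's last bit has left the transmitter
            delays[pid] = now - arrivals[pid]
            busy = False
        if not busy and waiting:  # start transmitting the next queued packet
            nxt = waiting.popleft()
            busy = True
            heapq.heappush(events, (now + service, counter, "departure", nxt))
            counter += 1
    return delays


if __name__ == "__main__":
    # Three 1000-byte packets arriving together on an 8 Mbps link.
    print(simulate_fifo_link([0.0, 0.0, 0.0], 8000, 8e6))
```

Because work is done only when an event occurs, cost grows with the number of events (packets), which is why DES becomes time-consuming for large networks carrying high-rate video.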

The rest of the paper is organized as follows: Section (2) introduces related work, covering recent IPTV and video compression research. Section (3) describes the implementation of IPTV networks with small and large numbers of nodes. Section (4) discusses the proposed two-stage compression; Section (5) characterizes IPTV network performance; and Section (6) concludes the paper.

Related Work

Video coding is a huge, constantly changing field that keeps introducing advances in video compression technology, which makes it a fertile research field for new ideas and concepts. Hence, researchers have evaluated codecs against their design improvements to find the most suitable one for the offered applications and services. In [9], the authors compare the H.264/MPEG-AVC, H.265/MPEG-HEVC, and Google VP9 encoders. The comparison is supported by experimental results using similar encoding configurations for the three encoders, showing that H.265/MPEG-HEVC provides significant average bit-rate savings of 43.3% and 39.3% relative to VP9 and H.264/MPEG-AVC, respectively.

In addition, they report that, at the same objective quality, the VP9 encoder produces an average bit-rate overhead of about 8.4% compared with the H.264/MPEG-AVC encoder. The results also show that VP9 encoding times are more than 100 times higher than those measured for the x264 encoder. However, compared with the full-fledged H.265/MPEG-HEVC reference software encoder implementation, the VP9 encoding time is lower on average by a factor of 7.35.

The authors of [2] discuss existing alternative UHDTV encoding mechanisms that can satisfy market requirements for UHD content transmission and the growing demand for UHD televisions. They examine the recently introduced VP9 and H.265 encoding schemes in terms of their compression efficiency for video sequences beyond HDTV resolution. They use the most popular and widespread encoder, H.264/AVC, as the comparison baseline, showing the actual differences between the encoding algorithms in terms of perceived quality. The paper indicates that the coding-efficiency comparison between VP9 and AVC is slightly in favor of AVC (5.1%) in terms of subjective scores and slightly in favor of VP9 (1.59%) in terms of PSNR.
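PSNR, the objective metric mentioned above, compares a decoded frame with its original through the mean squared error: PSNR = 10·log10(MAX² / MSE), where MAX is the largest sample value (255 for 8-bit video). A minimal sketch for grayscale frames stored as nested lists:

```python
import math


def psnr(original, processed, max_value=255):
    """Peak signal-to-noise ratio (dB) between two equally sized 2-D frames."""
    flat_o = [p for row in original for p in row]
    flat_p = [p for row in processed for p in row]
    if len(flat_o) != len(flat_p):
        raise ValueError("frames must have the same size")
    mse = sum((a - b) ** 2 for a, b in zip(flat_o, flat_p)) / len(flat_o)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    frame = [[100, 120], [140, 160]]
    decoded = [[101, 119], [141, 159]]  # every sample off by one
    print(f"{psnr(frame, decoded):.2f} dB")
```

Higher PSNR means smaller pixel error; as the comparison in [2] shows, it does not always rank codecs the same way as subjective scores.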

In [13], the authors stress the importance of a more efficient next-generation video coding standard, which is in high demand at the moment. There seem to be two main contenders for the position of the next state-of-the-art video compression standard: H.265/HEVC and Google VP9. The authors compare these codecs in terms of compression efficiency, which they assess through intra compression, giving a detailed overview of the intra-compression data flow in HEVC and VP9. They indicate that both VP9 and HEVC provide higher compression efficiency than the current industrial video compression standard, AVC, and they conclude that HEVC provides better compression rates than VP9.

In [7], the authors explain that Motion Estimation (ME) is an essential part of almost all video coding standards, including the newly emerged High Efficiency Video Coding (H.265/HEVC). HEVC achieves better compression performance and coding efficiency for beyond-HD and UHD videos. However, its main drawback is its computational complexity, which arises mostly from ME; in fact, ME consumes more than half of the encoding time. Therefore, the authors propose a New Combined Three Step Search pattern for the ME algorithm to reduce encoding complexity. Compared with existing patterns, their simulation results show an average time saving of about 50.07% and slight improvements in quality.
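To show why search patterns cut ME cost, the sketch below implements the classic three-step search (TSS) with the sum of absolute differences (SAD) as the matching cost: instead of testing every displacement in a ±7 window (225 candidates), it tests 9 points around the current best at step sizes 4, 2, and 1. This is the textbook TSS, not the New Combined Three Step Search of [7], whose details are not given here; block size and search range are assumptions.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between an n x n block and a displaced reference block."""
    return sum(abs(cur[by + r][bx + c] - ref[by + dy + r][bx + dx + c])
               for r in range(n) for c in range(n))


def three_step_search(cur, ref, bx, by, n=8, step=4):
    """Classic TSS: returns (motion vector (dx, dy), SAD) for the block at (bx, by)."""
    h, w = len(ref), len(ref[0])
    best = (0, 0)
    best_cost = sad(cur, ref, bx, by, 0, 0, n)
    while step >= 1:
        cx, cy = best  # search around the best match of the previous step
        for dy in (-step, 0, step):
            for dx in (-step, 0, step):
                mx, my = cx + dx, cy + dy
                # Skip candidates that fall outside the reference frame.
                if not (0 <= bx + mx and bx + mx + n <= w and 0 <= by + my and by + my + n <= h):
                    continue
                cost = sad(cur, ref, bx, by, mx, my, n)
                if cost < best_cost:
                    best_cost, best = cost, (mx, my)
        step //= 2  # 4 -> 2 -> 1
    return best, best_cost


if __name__ == "__main__":
    ref = [[x + 100 * y for x in range(24)] for y in range(24)]
    cur = [[0] * 24 for _ in range(24)]
    for r in range(8):  # current block = reference content displaced by (+4, -4)
        for c in range(8):
            cur[8 + r][8 + c] = ref[4 + r][12 + c]
    print(three_step_search(cur, ref, 8, 8))
```

The trade-off is that TSS evaluates at most 25 candidates but can settle in a local minimum on some content, which is the kind of weakness improved patterns try to address.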

In [11], the authors illustrate the importance of video encoding given the emergence of the H.265/HEVC video coding standard as well as 3D video coding for multimedia communications. They compare H.265/HEVC and H.264/AVC in terms of video traffic and statistical multiplexing characteristics, and they examine H.265/HEVC traffic variability for long videos. In addition, they investigate the traffic characteristics and statistical multiplexing of scalable video encoded with the H.264/AVC SVC extension, as well as of 3D video encoded with the H.264/AVC MVC extension.

In summary, the results above show that HEVC outperforms the other alternatives over a wide range of bit rates, from very low to high. However, all of these valuable efforts and codec comparisons between HEVC and/or VP9 and H.264 are made only from the subjective point of view. The comparisons lack actual system applications that assess how suitable each codec format is for video delivery. In particular, they do not examine the impact of different codec data rates on an actual application in terms of both objective and subjective merits. This paper tries to narrow this gap.

IPTV Network Small- and Large-Scale Configurations over OPNET

In this section, the IPTV components that will be implemented using OPNET are described in detail.

IPTV main building elements

Generally, an IPTV network contains four main architectural elements that are common to all vendors. A graphical view of a network architecture used to deliver a streamed IPTV channel is illustrated in Figure 1.

Figure 1: Two-way nature of an IPTV network

- IPTV Head end: This is the point at which most IPTV channel content is captured from different broadcasters and formatted for distribution over the IP network. Typically, the head end ingests national feeds of linear programming via satellite or a terrestrial fiber-based network. It may consist of several content servers, each responsible for a certain type of data preparation and service, so that the many IPTV services offered can be fully managed. Broadcast TV streaming servers stream live IPTV content, and the IP Video on Demand (VoD) application server handles data storage and caching. The IPTV head-end middleware and application servers handle provisioning of new subscribers, billing, and overall video asset management. In addition, a network time server provides the internal clock that synchronizes the network components.

- Service Provider Core/Edge Network: This is the point at which the previously prepared data representing the channel line-up is transported over the service provider's IP network. This network can be a mix of a well-engineered existing IP network and other purpose-built IP networks for video transport. At the network edge, the IP network is connected to the access network. Each of these networks is unique to the service provider and usually includes equipment from multiple vendors.

- Access Network: This is the service provider's link to the individual household, referred to as “the last mile”. It is the broadband connection between the service provider and the household and can be based on various technologies, such as DSL (digital subscriber line) or fiber PON (passive optical networking). Many IPTV networks use variants of asymmetric DSL (ADSL) and very-high-speed DSL (VDSL) to deliver the IPTV service to the household. In this case, the service provider places a DSL modem at the customer premises to deliver an Ethernet connection to the home network [8].

- Home Network: This network distributes the IPTV service throughout the home; many different types of home networks exist. However, IPTV requires a very robust, high-bandwidth network, which today can only be achieved using wireline technology. This is the endpoint to which the television set is connected via a set-top box (STB) [3][6].

IPTV Content Flow

IPTV content flow is defined as the transfer of media from one functional area to another, including media capture, compression, packetization, transmission, packet reception, decompression, and decoding of the received media signal back into its original form.

Figure 2 gives a block-diagram overview of the main IPTV content flow. A head end takes each individual channel and encodes it into a digital video format such as H.264/AVC, which remains the most prevalent encoding standard for digital video worldwide.

After encoding, the data is packetized by dividing data files or data blocks into fixed-size packets of compressed data. Each channel is addressed and encapsulated into IP to be sent out over the network. These channels are typically IP multicast streams, although certain vendors use IP unicast streams as well. IP multicast has several perceived advantages: it enables the service provider to propagate one IP stream per broadcast channel from the video head end to the service provider's access network. This is beneficial when many users want to tune in to the same broadcast channel at the same time (e.g., thousands of viewers tuning in to a sporting event). Compression is therefore the key technology that makes IPTV a reality. Compression reduces the storage space required for digital information by exploiting the limitations of both the human visual and auditory systems [6].
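A widely used convention for the fixed-size packets is the MPEG-2 Transport Stream: 188-byte packets (a 4-byte header plus 184 bytes of payload), grouped seven at a time (1316 bytes) into one UDP/IP datagram. The text does not state which packetization the modeled head end uses, so the sketch below is illustrative only; the PID value is hypothetical, and padding the final payload with 0xFF is a simplification of the adaptation-field stuffing a real multiplexer would use.

```python
TS_PACKET_SIZE = 188
TS_PAYLOAD_SIZE = 184  # 188 bytes minus the 4-byte TS header
TS_PER_DATAGRAM = 7    # 7 x 188 = 1316 bytes per UDP payload


def packetize(stream: bytes, pid: int = 0x100):
    """Split a compressed stream into TS packets and group them into datagram payloads."""
    packets = []
    cc = 0  # 4-bit continuity counter
    for offset in range(0, len(stream), TS_PAYLOAD_SIZE):
        chunk = stream[offset:offset + TS_PAYLOAD_SIZE]
        pusi = 1 if offset == 0 else 0  # payload_unit_start_indicator on the first packet
        header = bytes([
            0x47,                                 # sync byte
            (pusi << 6) | ((pid >> 8) & 0x1F),    # PUSI flag + 5 high PID bits
            pid & 0xFF,                           # 8 low PID bits
            0x10 | cc,                            # payload only + continuity counter
        ])
        # Simplification: pad the last payload with 0xFF instead of adaptation-field stuffing.
        payload = chunk + b"\xff" * (TS_PAYLOAD_SIZE - len(chunk))
        packets.append(header + payload)
        cc = (cc + 1) % 16
    return [b"".join(packets[i:i + TS_PER_DATAGRAM])
            for i in range(0, len(packets), TS_PER_DATAGRAM)]


if __name__ == "__main__":
    datagrams = packetize(bytes(2576))  # 14 TS packets' worth of payload
    print([len(d) for d in datagrams])
```

Each datagram is then addressed to the channel's multicast group, so every viewer of that channel receives the same fixed-size packets.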

Figure 2: Simplified IPTV content flow

IPTV architecture over OPNET

This section is dedicated to the IPTV architecture over OPNET and is intended as a reference for any further implementation of IPTV on OPNET. The architecture has the following components.

- IPTV Hardware: Based on the architecture and content flow described above, a set of common nodes is recommended for implementing an IPTV network in any network simulator. In two different project editors, an IPTV network is created with a small number of nodes, as shown in Figure 3, and with a large number of nodes, as shown in Figure 4. Both networks were created using the same modeled link speeds/bandwidths and modeled nodes, and were tested on the same machine.

In both project editors, the IPTV_Headend_Video node acts as the IPTV video content source node in all created scenarios and for all types of imported traffic. The DSLAM and level3 nodes act as the interface nodes to the source node in the networks of Figure 3 and Figure 4, respectively.

Figure 3: Small-scale IPTV network

Figure 4: Large-scale IPTV network

The Rendezvous Point (RP) router node present in both project editors acts as the multicasting node; it can be a router or an edge node. Multicast packets from the upstream source and join messages from the downstream routers meet, or “rendezvous”, at this node. The most important point to consider is that, when an RP is configured, the other routers in the network do not need to know the source address of every multicast group. However, the RP must be declared at each router, which is done by configuring the RP address on it. The RP address can be the IP address of any active RP interface. The remaining nodes in Figure 3 and Figure 4 are switches and TV sets.

- IPTV Protocols: IPTV protocols are configured to provide tight management, routing, and control of video packet delivery over the network. For routing, the configured protocol depends on whether the network nodes lie within the same network partition, that is, are managed by the same Autonomous System (AS) (intra-AS routing protocols), or span several ASs (inter-AS routing protocols). In both networks, all nodes are assumed to lie within the same partition; hence, the Routing Information Protocol (RIP) and/or Open Shortest Path First (OSPF) can be configured. The OPNET simulator makes it easy to configure various routing protocols for modeling several technologies. For traffic delivery, as mentioned previously, IPTV can be unicast or multicast; however, IP multicasting is the most widely used.
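Of the two intra-AS options, OSPF is a link-state protocol in which each router runs Dijkstra's shortest-path-first algorithm over the link costs it has learned, while RIP is a distance-vector protocol that simply counts hops. The sketch below runs Dijkstra on a small topology; the node names borrow from the figures, but the links and costs are hypothetical and not taken from the modeled networks.

```python
import heapq

# Hypothetical topology: node -> {neighbor: link cost}.
TOPOLOGY = {
    "IPTV_Headend_Video": {"DSLAM": 1},
    "DSLAM": {"IPTV_Headend_Video": 1, "RP": 1, "Router_B": 4},
    "RP": {"DSLAM": 1, "Switch_1": 1, "Router_B": 1},
    "Router_B": {"DSLAM": 4, "RP": 1, "Switch_2": 1},
    "Switch_1": {"RP": 1, "TV_1": 1},
    "Switch_2": {"Router_B": 1, "TV_2": 1},
    "TV_1": {"Switch_1": 1},
    "TV_2": {"Switch_2": 1},
}


def shortest_paths(graph, source):
    """Dijkstra's algorithm: returns (distance, predecessor) maps from source."""
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale entry; a shorter path was already settled
        visited.add(node)
        for nbr, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return dist, prev


def path_to(prev, source, target):
    """Rebuild the route from the predecessor map."""
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    dist, prev = shortest_paths(TOPOLOGY, "IPTV_Headend_Video")
    print(dist["TV_2"], path_to(prev, "IPTV_Headend_Video", "TV_2"))
```

Here the cheaper three-link detour through the RP beats the direct but costly DSLAM to Router_B link; a hop-count protocol such as RIP would have preferred the direct link.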

The OPNET Modeler supports IP multicast, including the Internet Group Management Protocol (IGMP) and Protocol Independent Multicast-Sparse Mode (PIM-SM). IGMP is used by hosts and adjacent routers to establish multicast group memberships. When a viewer changes channel, the TV set sends IGMP leave/join messages to notify the upstream equipment that it is leaving one group and joining another. PIM-SM is a multicast routing protocol that explicitly builds shared trees rooted at an RP per group and optionally creates shortest-path trees per source. The multicast protocols are configured to handle data control and management [5][12].
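The effect of IGMP joins and leaves on a router port can be pictured as a table of groups with at least one member: a group is forwarded while it has members and pruned when the last one leaves, and a channel change is a leave followed by a join. The class below is a hypothetical, simplified model (real IGMP routers rely on periodic queries and timers, which are ignored here), and the group addresses are illustrative.

```python
from collections import defaultdict


class IgmpGroupTable:
    """Hypothetical, simplified view of one router port's multicast memberships."""

    def __init__(self):
        self.members = defaultdict(set)  # group address -> set of joined hosts

    def join(self, host, group):
        self.members[group].add(host)

    def leave(self, host, group):
        hosts = self.members.get(group)
        if hosts is None:
            return
        hosts.discard(host)
        if not hosts:  # last member left: stop forwarding this group
            del self.members[group]

    def zap(self, host, old_group, new_group):
        """Channel change = leave the old group, then join the new one."""
        self.leave(host, old_group)
        self.join(host, new_group)

    def forwarded_groups(self):
        return set(self.members)


def downstream_load_bps(table, channel_bps, multicast=True):
    """Link load: one stream per active group (multicast) vs one per viewer (unicast)."""
    if multicast:
        return len(table.members) * channel_bps
    return sum(len(hosts) for hosts in table.members.values()) * channel_bps


if __name__ == "__main__":
    port = IgmpGroupTable()
    for tv in ("TV_1", "TV_2", "TV_3"):
        port.join(tv, "239.1.1.1")
    print(downstream_load_bps(port, 4e6), downstream_load_bps(port, 4e6, multicast=False))
    port.zap("TV_3", "239.1.1.1", "239.1.1.2")
    print(sorted(port.forwarded_groups()))
```

Three viewers of the same 4 Mbps SDTV channel cost one stream (4 Mbps) with multicast but three streams (12 Mbps) with unicast, which is the saving described for popular live events.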

Two-Stage Compression

There is no fixed definition of low latency; it is application dependent. Nevertheless, low latency is a design goal for any system, especially one involving real-time interaction with video content. Latency is counted from the instant a frame is captured to the instant it is displayed. The delays accounted for along a video frame's trip include the processing delay, starting from the capture of the pixels by a camera, together with the time required to transmit the compressed video stream; their sum defines what is called the end-to-end (ETE) delay.

However, the largest contribution to video latency comes from the processing stages that require temporary data storage. Moreover, there are tradeoffs between achieving low latency and finding the optimum balance of hardware, processing speed, transmission speed, and video quality. Hence, video system engineers tend to measure latency in terms of video data buffering. Since any temporary storage of video data (uncompressed or compressed) increases latency, reducing buffering is a good primary goal.

Indeed, the newly introduced HEVC requires about 50% less storage than its predecessor, H.264. This section attempts to bring H.264 closer to H.265 in compression ability without much loss. The suggested procedure for increasing the compression ratio of an H.264-coded video file is illustrated in Figure 5. The uncompressed video file passes through two compression stages, achieving a greater reduction in buffering while taking Human Visual System (HVS) perception into account.

Figure 5: Two-stage compression block diagram

Figure 5 shows that the input video is first divided into separate frames, which are then passed to the first compression stage. The next questions are how video quality is affected and which compression type should be used. 2D wavelet compression is used as the first compression stage. The wavelet transform decomposes a signal into a set of basis functions called wavelets, mapping a function of time (or space) into a 2-D time-scale representation.

Generally, wavelets are time-domain windowing functions, the simplest of which is based on a rectangular window that has unit value over a time interval and zero elsewhere. Wavelets have zero DC value and good time localization. They also decay rapidly toward zero with time; hence, wavelets are usually bandpass signals. Wavelet compression produces no blocking artifacts and can use very small wavelets to isolate very fine details in a signal, while very large wavelets can identify coarse details. These characteristics allow wavelet compression to achieve a high compression ratio while maintaining image quality without much loss. Therefore, the first compression stage is performed by the 2D Discrete Wavelet Transform (DWT).

The Haar Wavelet Transform (HWT) is used because it performs best in terms of computational time and speed, simplicity, and compression efficiency, and it is memory efficient since it can be calculated in place without a temporary array. According to the chosen energy level of each frame, the signal is decomposed over four levels with a retained-energy threshold of about 99.23%, using the balance sparsity-norm criterion in an attempt to preserve quality, and compression is then performed. Finally, all compressed frames are reassembled into a video file that passes through the second compression stage using the well-known H.264 standard.
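The first stage can be sketched as a multi-level orthonormal 2-D Haar DWT followed by thresholding that zeroes the smallest coefficients while keeping at least 99.23% of the energy. Two assumptions are worth stating: the simple "keep the largest coefficients until the energy target is met" rule is a stand-in for MATLAB's balance sparsity-norm threshold, not a reproduction of it; and this version uses temporary lists for clarity rather than the in-place computation mentioned above.

```python
import math

SQRT2 = math.sqrt(2.0)


def _haar_1d(v):
    """One orthonormal Haar step: [averages | details]."""
    half = len(v) // 2
    avg = [(v[2 * i] + v[2 * i + 1]) / SQRT2 for i in range(half)]
    det = [(v[2 * i] - v[2 * i + 1]) / SQRT2 for i in range(half)]
    return avg + det


def _ihaar_1d(v):
    """Inverse of _haar_1d."""
    half = len(v) // 2
    out = [0.0] * len(v)
    for i in range(half):
        a, d = v[i], v[half + i]
        out[2 * i] = (a + d) / SQRT2
        out[2 * i + 1] = (a - d) / SQRT2
    return out


def haar2d(img, levels):
    """Multi-level 2-D Haar DWT of a square image whose side is divisible by 2**levels."""
    m = [[float(p) for p in row] for row in img]
    size = len(m)
    for _ in range(levels):
        for r in range(size):  # transform the rows of the current LL block
            m[r][:size] = _haar_1d(m[r][:size])
        for c in range(size):  # then its columns
            col = _haar_1d([m[r][c] for r in range(size)])
            for r in range(size):
                m[r][c] = col[r]
        size //= 2  # recurse into the new LL sub-band
    return m


def ihaar2d(coeffs, levels):
    """Inverse multi-level 2-D Haar DWT."""
    m = [row[:] for row in coeffs]
    size = len(m) >> (levels - 1)  # the smallest block was transformed last
    for _ in range(levels):
        for c in range(size):  # undo columns first, then rows
            col = _ihaar_1d([m[r][c] for r in range(size)])
            for r in range(size):
                m[r][c] = col[r]
        for r in range(size):
            m[r][:size] = _ihaar_1d(m[r][:size])
        size *= 2
    return m


def keep_energy(coeffs, fraction=0.9923):
    """Zero the smallest coefficients while retaining at least `fraction` of the energy."""
    mags = sorted((abs(v) for row in coeffs for v in row), reverse=True)
    total = sum(v * v for v in mags)
    kept, thr = 0.0, 0.0
    for v in mags:
        kept += v * v
        thr = v
        if kept >= fraction * total:
            break
    return [[v if abs(v) >= thr else 0.0 for v in row] for row in coeffs]
```

For a 16×16 frame, `ihaar2d(keep_energy(haar2d(frame, 4)), 4)` returns the approximated frame. Because the orthonormal Haar transform preserves energy, the squared reconstruction error equals the energy of the discarded coefficients, at most 0.77% of the frame energy here, while the zeroed coefficients are what the later encoding stage can exploit.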

To verify the proposal in this section, we implemented the H.264-based two-stage compression in MATLAB. This module will later be implemented in OPNET for comparison purposes.

Two test video sequences were used in the experiment. The first video is 934 MB in size, 07:06 minutes long, at 30 frames/sec, with an .avi extension. H.264 compresses it to 210 MB, a 77.5% reduction. The same video is divided into 12802 images and compressed using the proposed modified H.264 method (wavelet stage followed by H.264). The resulting video is 45 MB in size and 07:06 minutes long, a reduction of 95.2% with respect to the original video and of 78.6% with respect to the H.264-compressed video.

The second video is 704 MB in size, with a .mov extension, 12:14 minutes long, at 24 frames/sec. For this video, H.264 produces a compressed video of 81 MB, an 88.5% reduction with respect to the original. The video is divided into 17620 images, which are compressed using the proposed method, resulting in a 56.9 MB video in H.264 format that is 12:14 minutes long. This is a reduction of 92% with respect to the original video, and the file is 70.24% of the size of the H.264-compressed video (a further reduction of about 29.8%).
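The percentages for both test videos follow from the reported file sizes via reduction = 100·(1 − compressed/original). The sketch recomputes them so that the H.264 and two-stage results can be compared on the same footing.

```python
def reduction_percent(original_mb: float, compressed_mb: float) -> float:
    """Size reduction in percent: 100 * (1 - compressed / original)."""
    return 100.0 * (1.0 - compressed_mb / original_mb)


# File sizes (MB) reported in the text.
VIDEOS = {
    "video 1 (.avi, 07:06, 30 fps)": {"original": 934.0, "h264": 210.0, "two_stage": 45.0},
    "video 2 (.mov, 12:14, 24 fps)": {"original": 704.0, "h264": 81.0, "two_stage": 56.9},
}

if __name__ == "__main__":
    for name, s in VIDEOS.items():
        print(f"{name}: H.264 {reduction_percent(s['original'], s['h264']):.1f}%, "
              f"two-stage vs original {reduction_percent(s['original'], s['two_stage']):.1f}%, "
              f"two-stage vs H.264 {reduction_percent(s['h264'], s['two_stage']):.1f}%")
```

For the first video this gives 77.5%, 95.2%, and 78.6%. For the second it gives 88.5%, 91.9%, and 29.8%; the 70.24% figure for that video corresponds to the size ratio 56.9/81 rather than to a reduction.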

In addition, the quality of the compressed videos changes only slightly, as can be seen by comparing Figures 6, 7, and 8: Figure 6 shows the original image frame, Figure 7 the first-level compressed image frame, and Figure 8 the frame recovered from the new modified H.264. Therefore, the suggested method further reduces the video file size compared with the well-known H.264 (by 78.6% and about 29.8% for the two test videos), at the expense of a slight change in video quality and minor additional computation. Our aim is better network performance from the perspective of objective merits, thereby enhancing QoS.

Figure 6: Original image

Figure 7: First-level compressed image

Figure 8: New modified H.264 recovered image

IPTV Network Performance Measures

Given the downstream IP bit rates and the number of video subscribers, IPTV can be considered a key component of service provider growth. With such significant financial rewards at stake, service providers take IPTV QoE very seriously. QoE denotes how well the offered video service satisfies users' expectations. The IPTV quality experienced by subscribers must be equal to or better than that of today's cable and satellite TV services; otherwise, service providers run the risk of significant subscriber churn and the resulting loss of revenue. QoE consists of subjective and objective quality merits. The overall objective effect on the IPTV service arises from network performance, which defines what is called QoS.

IPTV traffic shares network links with other traffic from the same multi-play subscriber or from other subscribers sharing an uplink from an aggregation device. All IPTV services contend for finite network bandwidth and equipment resources; in short, objective and subjective merits are correlated. Hence, the quality of the received service can be anticipated from the QoS parameters collected on network performance. In addition, for IPTV, the Media Delivery Index (MDI) defines a set of measurements for monitoring and troubleshooting networks carrying IPTV traffic. MDI defines two merits, the Delay Factor (DF) and the Media Loss Rate (MLR), which identify the buffer size required by the inter-arrival times of IP packets and count the number of lost MPEG packets per second, respectively. DF and MLR translate directly into the networking terms jitter and loss. For Internet services, QoS parameters are usually defined as follows:

- Packet End-to-End (ETE) delay (sec): The average time required to deliver a video application packet to the application layer of the destination node, including network node processing delay, queuing delay, the packet transmission time between two network elements, and the propagation delay over the network link. An ETE delay greater than 1 sec is considered the worst QoS in terms of end-user QoE. The acceptable delay should be less than 200 ms; if the maximum delay is 250 ms, the received video is still tolerable, while a delay greater than 400 ms is not acceptable.

- Packet jitter (sec): Measures the difference between the ETE delays of two consecutive packets. The average jitter can be considered acceptable if it is less than 60 ms and ideal if it is less than 10 ms.

- Throughput (bps): The average number of bits successfully received by the receiver node per second. The minimum acceptable video transmission rate ranges from 10 kbps to 5 Mbps.

- Packet delay variation (PDV): Describes the variation in the ETE delays experienced by video packets. OPNET collects the PDV statistic at two levels: Global PDV, collected from all network nodes, and Node PDV, recording data received by a specific node.

- Point-to-Point (P2P) Queuing delay (sec): Measures the instantaneous packet waiting time in the transmitter channel's queue, counted from the time the packet enters the transmitter channel queue to the time the packet's last bit is transmitted.
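The measures above, together with the MDI pair, can be computed from a per-packet trace of send times, receive times, sizes, and sequence numbers. The sketch below makes several assumptions: PDV is taken as the variance of ETE delays (our reading of OPNET's statistic, not confirmed by the text); jitter is the mean absolute difference of consecutive delays; DF follows a simplified reading of RFC 4445 (the spread of a virtual buffer drained at the media rate); MLR counts gaps in hypothetical sequence numbers; and the 250 to 400 ms "degraded" band is our own label for a range the text leaves unspecified.

```python
from statistics import mean, pvariance


def qos_metrics(send_t, recv_t, sizes):
    """ETE delay, jitter, PDV, and throughput from a per-packet trace (seconds, bytes)."""
    delays = [r - s for s, r in zip(send_t, recv_t)]
    jitter = mean(abs(b - a) for a, b in zip(delays, delays[1:])) if len(delays) > 1 else 0.0
    span = max(recv_t) - min(recv_t)
    return {
        "ete_s": mean(delays),
        "jitter_s": jitter,
        "pdv": pvariance(delays),  # assumption: PDV as variance of ETE delays
        "throughput_bps": 8 * sum(sizes) / span if span > 0 else 0.0,
    }


def mdi_delay_factor(recv_t, sizes, media_rate_bps):
    """Simplified RFC 4445 DF (seconds): spread of a virtual buffer drained at the media rate."""
    rate = media_rate_bps / 8.0  # bytes per second
    received, depths = 0, []
    for t, s in zip(recv_t, sizes):
        received += s
        depths.append(received - rate * (t - recv_t[0]))  # bytes in, minus bytes drained
    return (max(depths) - min(depths)) / rate


def mdi_media_loss_rate(seq, interval_s):
    """Lost packets per second, inferred from gaps in (hypothetical) sequence numbers."""
    lost = sum(b - a - 1 for a, b in zip(seq, seq[1:]) if b > a + 1)
    return lost / interval_s


def classify_ete(d):
    """Thresholds from the text; the 'degraded' band is not specified there."""
    if d < 0.200:
        return "acceptable"
    if d <= 0.250:
        return "tolerable"
    if d <= 0.400:
        return "degraded"
    if d <= 1.0:
        return "not acceptable"
    return "worst"


def classify_jitter(j):
    if j < 0.010:
        return "ideal"
    if j < 0.060:
        return "acceptable"
    return "poor"
```

For example, three 1316-byte packets of a 10.528 Mbps stream received at 0, 2, and 2 ms instead of 0, 1, and 2 ms give a DF of 1 ms, meaning a receiver needs about 1 ms of extra buffering to absorb that burst.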

Case Study: Network Performance Evaluation Subjected to Uncompressed Video Delivery of Different Data Rates in Small and Large-Scale Network Nodes

The main purpose of this case study is to compare network performance at two different scales under different data rates, in order to identify the most dominant factor affecting IPTV system performance. The network setups of Figures 3 and 4 are reused, with the frame rates of the defined video applications modified as follows:

- Video Conferencing (AF41): frame rate reduced to 15 FPS (a frame inter-arrival time of about 66.7 ms);

- Video Conferencing (AF32): frame rate reduced to 10 FPS (a frame inter-arrival time of 100 ms);

- All other network configurations remain unchanged.
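The two settings translate into different offered loads. Assuming, as the configuration above suggests, that each video application is characterized by a frame rate and a per-frame size, the frame inter-arrival time is 1/FPS and the offered bit rate is frame size × 8 × FPS. The frame size below is hypothetical, since the text does not give one.

```python
def frame_interarrival_s(fps: float) -> float:
    """Frame inter-arrival time is the reciprocal of the frame rate."""
    return 1.0 / fps


def video_bitrate_bps(frame_bytes: int, fps: float) -> float:
    """Offered load of a video application with a constant frame size."""
    return frame_bytes * 8 * fps


if __name__ == "__main__":
    FRAME_BYTES = 10_000  # hypothetical frame size, not taken from the paper
    for label, fps in (("AF41", 15), ("AF32", 10)):
        print(f"{label}: {fps} FPS -> {frame_interarrival_s(fps) * 1000:.1f} ms between frames, "
              f"{video_bitrate_bps(FRAME_BYTES, fps) / 1e6:.2f} Mbps")
```

With equal frame sizes, the 15 FPS application offers 1.5 times the load of the 10 FPS one, which is what distinguishes the "high" and "low" data-rate channels in the results that follow.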

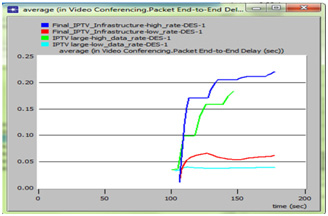

The experiment compares network performance in terms of the following QoS metrics: ETE delay (sec), PDV, traffic received (bytes/sec), P2P throughput (bps), and P2P utilization.

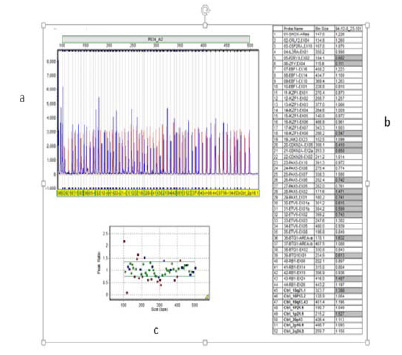

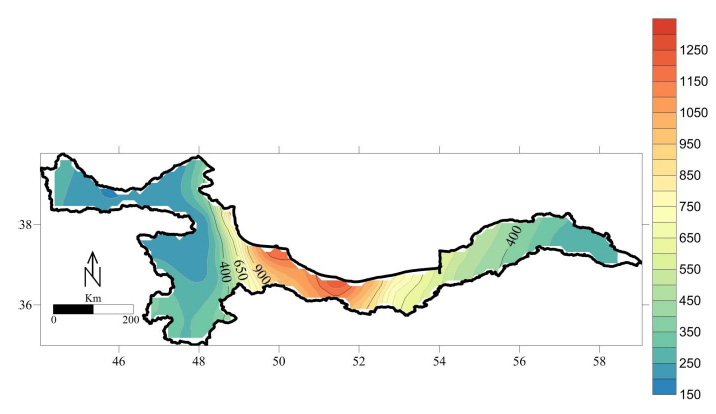

Figure 10 shows the global PDV collected from all network nodes in the two networks (with small and large numbers of nodes) for video channels delivered at different data rates. In both networks, video packets delivered at a high data rate experience higher PDV than those delivered at a low data rate. However, the PDV shows peaks in the small-scale network, while it is almost constant over time in the large-scale network.

Figure 9

Figure 10: Global collected PDV for high and low data rate video channel delivery over networks with small and large numbers of nodes

Figure 10 also shows that high-frame-rate video channels over the small-scale network have a higher PDV than over the large-scale network, by approximately 0.0034. Likewise, low-frame-rate videos over the small-scale network experience a higher PDV than over the large-scale network, by approximately 0.0005. That is, at both high and low data rates, video packets over the small-scale network are more likely to be received out of order than those over the large-scale network.

Figure 11 shows the global ETE delay (sec) collected from all network nodes in the two networks (with small and large numbers of nodes) for different data rates. In both networks, high-data-rate video channels have a higher ETE delay, with higher peaks, than low-data-rate channels over the same network. However, increasing the number of nodes appears to reduce the ETE delay, relative to the small-scale network, by approximately 75 ms for high-data-rate channels and by about 25 ms for low-data-rate channels. Hence, we conclude that increasing the number of nodes (routers and switches) reduces both PDV and ETE delay (sec) for video channels at different data rates.

Because subjective and objective quality metrics are correlated, the quality of the received video channels can be estimated from the measured network statistics. Comparing the ETE delay shown in Figure 11 with the acceptable values given in Section 5 indicates that video channels experiencing a delay in the range of 100 ms ± {1, 2, 3, 4} ms have a low impact on the user's perceived quality, whereas for channels whose ETE delay goes beyond 100 ms ± 16 ms, users perceive a very annoying received quality. Section 5 also requires that the maximum ETE delay not exceed 200 ms.
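These thresholds can be turned into a small rule, sketched below under one possible reading: the ± values are treated as the delay variation (jitter) of a stream whose nominal ETE delay is about 100 ms, and the 200 ms bound is applied to the maximum ETE delay. The function name `classify`, the band labels, and the "intermediate" band between ±4 ms and ±16 ms (which the text does not describe) are our assumptions.

```python
LOW_IMPACT_JITTER_MS = 4.0  # 100 ms ± {1, 2, 3, 4} ms: low impact on perceived quality
ANNOYING_JITTER_MS = 16.0   # beyond 100 ms ± 16 ms: very annoying quality
MAX_ETE_DELAY_MS = 200.0    # maximum ETE delay must not exceed 200 ms


def classify(max_delay_ms: float, jitter_ms: float) -> str:
    """Map a channel's maximum ETE delay and delay variation to a quality band.

    Assumption: the ± values in the text are read as delay variation (jitter)
    around a nominal ~100 ms delay; the "intermediate" band is hypothetical.
    """
    if max_delay_ms > MAX_ETE_DELAY_MS:
        return "exceeds 200 ms limit"
    if abs(jitter_ms) <= LOW_IMPACT_JITTER_MS:
        return "low impact"
    if abs(jitter_ms) > ANNOYING_JITTER_MS:
        return "very annoying"
    return "intermediate (not specified in the text)"
```

For example, `classify(100, 3)` returns `"low impact"`. The hard 200 ms limit is checked first so that a channel with small jitter but excessive delay is never reported as acceptable.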

Figure 11 shows that video channels of high and low data rate over the small-scale network experience maximum ETE delays of 220 ms and 60 ms respectively, while those over the large-scale network experience maximum ETE delays of 200 ms and 40 ms respectively. The 220 ms maximum of high data rate channels over the small-scale network therefore exceeds the 200 ms limit, while the large-scale network just reaches it. Hence, over both networks, users receiving low data rate video can expect better video quality than those receiving high data rate video, and users receiving high data rate video channels over the large-scale network can expect better quality than those over the small-scale network. The PDV collected in Figure 10 supports the same conclusion, since high data rate video packets experience higher PDV.
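A quick check of the four reported maximum ETE delays against the 200 ms bound can be sketched as follows. The dictionary only restates the values given above; the names are illustrative.

```python
MAX_ETE_DELAY_MS = 200  # upper bound on ETE delay stated in the text

# Maximum ETE delays (ms) as reported in the text: (network scale, data rate) -> delay.
reported_max_delay_ms = {
    ("small", "high"): 220,
    ("small", "low"): 60,
    ("large", "high"): 200,
    ("large", "low"): 40,
}


def within_limit(delay_ms, limit_ms=MAX_ETE_DELAY_MS):
    """True if the delay does not exceed the limit ("must not exceed")."""
    return delay_ms <= limit_ms


def margin_ms(delay_ms, limit_ms=MAX_ETE_DELAY_MS):
    """Headroom below the limit; negative when the limit is violated."""
    return limit_ms - delay_ms


if __name__ == "__main__":
    # Rank the scenarios from lowest to highest maximum delay.
    for (scale, rate), delay in sorted(reported_max_delay_ms.items(), key=lambda kv: kv[1]):
        status = "OK" if within_limit(delay) else "exceeds limit"
        print(f"{scale}-scale, {rate} rate: {delay} ms, margin {margin_ms(delay)} ms, {status}")
```

The ranking reproduces the ordering argued in the text: low data rate channels have the most headroom, and only the high data rate channels over the small-scale network violate the limit.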

In practice, telecommunication has become a highly competitive field, driven by advances in compression and IP technologies, as providers try to satisfy demanding consumer choices. According to the preceding experimental results, the data rate is the main factor affecting the quality of the service provided. Hence, compression techniques are essential for providers to serve a large number of customers and to increase the number of channels transmitted over limited bandwidth. Such techniques reduce content size by removing the strong correlation between similar neighboring frames.
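To make the idea of exploiting inter-frame correlation concrete, the sketch below codes the first frame on its own and every later frame as a byte-wise difference from its predecessor, then applies zlib to each. This is plain lossless frame differencing on synthetic data, not the paper's two-stage technique and not how standard codecs such as H.264/HEVC work (they add motion-compensated prediction and transform coding); all names and parameters are illustrative.

```python
import random
import zlib


def make_frames(num_frames=5, size=4096, changes_per_frame=32, seed=0):
    """Hypothetical 8-bit frames: a random first frame, then a few pixels changed per frame."""
    rng = random.Random(seed)
    frame = bytearray(rng.randrange(256) for _ in range(size))
    frames = [bytes(frame)]
    for _ in range(num_frames - 1):
        for _ in range(changes_per_frame):
            frame[rng.randrange(size)] = rng.randrange(256)
        frames.append(bytes(frame))
    return frames


def frame_difference(prev: bytes, cur: bytes) -> bytes:
    """Residual of cur relative to prev, taken modulo 256 so it fits in a byte."""
    return bytes((c - p) % 256 for p, c in zip(prev, cur))


def apply_difference(prev: bytes, diff: bytes) -> bytes:
    """Inverse of frame_difference: rebuild cur from prev and the residual."""
    return bytes((p + d) % 256 for p, d in zip(prev, diff))


def encode(frames):
    """First frame compressed on its own; every later frame compressed as a residual."""
    packets = [zlib.compress(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        packets.append(zlib.compress(frame_difference(prev, cur)))
    return packets


def decode(packets):
    """Rebuild frames; lossless coding means the decoder's reference equals the encoder's."""
    frames = [zlib.decompress(packets[0])]
    for packet in packets[1:]:
        frames.append(apply_difference(frames[-1], zlib.decompress(packet)))
    return frames


if __name__ == "__main__":
    frames = make_frames()
    independent = sum(len(zlib.compress(f)) for f in frames)
    differential = sum(len(p) for p in encode(frames))
    print(f"independent: {independent} bytes, differential: {differential} bytes")
```

Because consecutive synthetic frames differ in only a few pixels, each residual is mostly zeros and compresses to a small fraction of a full frame, which is the redundancy the paragraph above refers to.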

Conclusion

The telecommunication field is highly competitive, and IPTV is a new TV platform that is drawing attention because of its strong features in terms of QoE and QoS. This paper provides a network assessment of common IPTV management and control protocols in their operational mode at different data rates over networks of different scales. The assessment examines the dominant key technology with the greatest impact on achieved network performance, and hence on both QoS and QoE. The paper also proposes a two-stage compression technique for better network performance, and its results are promising. In addition, the paper sets the base for IPTV implementation in OPNET.

References

- Draft version 2 of HEVC, 2014, High-Efficiency Video Coding (HEVC) Range Extensions text specification: Draft 7, JCT-VC document JCTVC-Q1005, 17th JCT-VC meeting, Valencia, Spain.

- Grois, Dan & Mulayoff, 2013, Performance Comparison of H.265/MPEG-HEVC, VP9, and H.264/MPEG-AVC Encoders, 30th Picture Coding Symposium, San José, CA, USA, Dec 8-11, IEEE.

- Harte, Lawrence, 2008, IPTV Testing: Service Quality Monitoring, Analysis, and Diagnostics for IP Television Systems and Services, New York, USA.

- Held, Gilbert, 2007, Understanding IPTV, New York, USA.

- Lu, Zheng & Yang, Hongji, 2012, Unlocking the Power of OPNET Modeler, New York, USA.

- O’Driscoll, Gerard, 2008, Next Generation IPTV Services and Technologies, New Jersey, USA.

- P, Davis & Marikkannan, Sangeetha, April 2014, Implementation of Motion Estimation Algorithm for H.265/HEVC, International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, Vol. 3.

- Rahman, Md. Lushanur & Lipu, Molla Shahadat Hossain, 2010, IPTV Technology over Broadband Access Network and Traffic Measurement Analysis over the Network, in International Conference on Information, Networking and Automation (ICINA), paper V2-303.

- Rerabek, Martin & Ebrahimi, Touradj, 2014, Comparison of compression efficiency between HEVC/H.265 and VP9 based on subjective assessments, Multimedia Signal Processing Group (MMSPG), École Polytechnique Fédérale de Lausanne (EPFL), Lausanne, Switzerland.

- Schierl, T., Hannuksela, M., Wang, Y. K. & Wenger, S., 2012, System layer integration of high-efficiency video coding, IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 12, pp. 1871–1884.

- Seeling, Patrick & Reisslein, Martin, 2014, Video Traffic Characteristics of Modern Encoding Standards: H.264/AVC with SVC and MVC Extensions and H.265/HEVC, The Scientific World Journal, Hindawi Publishing Corporation, Article ID 189481, 16 pages.

- Sethi, Adarshpal S. & Hnatyshin, Vasil Y., 2013, The Practical OPNET® User Guide for Computer Network Simulation, New York, USA.

- Sharabayko, Maxim P., Ponomarev, Oleg G. & Chernyak, Roman I., 2013, Intra Compression Efficiency in VP9 and HEVC.

- Wiki MPEG, 2015, [Online] Available at: http://en.wikipedia.org/wiki/Moving_Picture_Experts_Group

- Wiki UHD, 2015, [Online] Available at: https://en.wikipedia.org/wiki/Ultra-high-definition_television

- Wikipedia, 2015, [Online] Available at: https://en.wikipedia.org/wiki/VP9